Nvidia May Move to Yearly GPU Architecture Releases

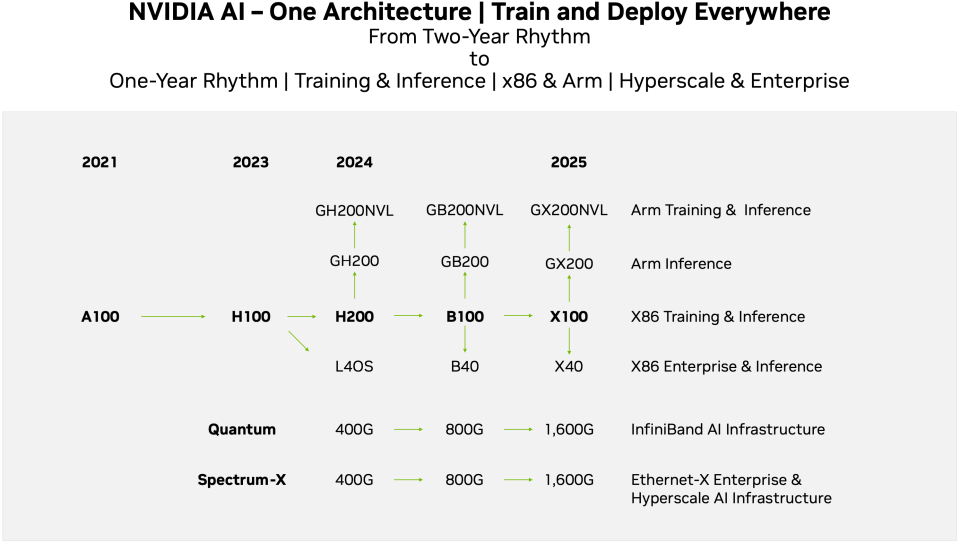

In a bid to maintain its leadership in artificial intelligence (AI) and high-performance computing (HPC) hardware, Nvidia plans to speed up development of new GPU architectures and essentially return to a one-year cadence for product introductions, according to its roadmap published for investors and further explained by SemiAnalysis. As a result, Nvidia's Blackwell architecture will arrive in 2024 and will be succeeded by a new architecture in 2025.

But before Blackwell arrives next year (presumably in the second half), Nvidia is set to roll out multiple new products based on its Hopper architecture. These include the H200, which might be a re-spin of the H100 made for better yields or simply higher performance, as well as the GH200NVL, which will address training and inference on large language models (LLMs) with an Arm-based CPU and a Hopper-based GPU. These products are set to arrive sooner rather than later.

As for the Blackwell family due in 2024, Nvidia appears to be preparing the B100 for AI and HPC compute on x86 platforms, which will succeed the H100. In addition, the company is preparing the GB200, presumably a Grace Blackwell module featuring an Arm CPU and a Blackwell GPU and targeting inference, as well as the GB200NVL, an Arm-based solution for LLM training and inference. The company is also planning a B40 product, presumably a client GPU-based solution for AI inference.

In 2025, Blackwell will be succeeded by an architecture designated with the letter X, which is probably a placeholder for now. Nvidia is preparing the X100 for x86 AI training and inference as well as HPC, the GX200 for Arm inference (Grace CPU + X GPU), and the GX200NVL for Arm-based LLM training and inference. In addition, there will be an X40 product, presumably a client GPU-based solution, for lower-cost inference.

For now, Nvidia leads the market for AI GPUs, but AWS, Google, and Microsoft, as well as traditional AI and HPC players like AMD and Intel, are all preparing their new-generation processors for training and inference, which is why Nvidia has reportedly accelerated its plans for B100- and X100-based products.

To further solidify its position, Nvidia has reportedly pre-purchased TSMC capacity and HBM memory from all three makers. In addition, the company is pushing its HGX and MGX servers in a bid to commoditize these machines and make them popular among end users, particularly in the enterprise AI segment.