Abstract

The use of predictive machine learning algorithms is increasingly common to guide or even take decisions in both public and private settings. Their use is touted by some as a potentially useful method to avoid discriminatory decisions since they are, allegedly, neutral, objective, and can be evaluated in ways no human decisions can. By (fully or partly) outsourcing a decision process to an algorithm, it should allow human organizations to clearly define the parameters of the decision and to, in principle, remove human biases. Yet, in practice, the use of algorithms can still be the source of wrongful discriminatory decisions based on at least three of their features: the data-mining process and the categorizations they rely on can reconduct human biases, their automaticity and predictive design can lead them to rely on wrongful generalizations, and their opaque nature is at odds with democratic requirements. We highlight that the two latter aspects of algorithms and their significance for discrimination are too often overlooked in contemporary literature. Though these problems are not all insurmountable, we argue that it is necessary to clearly define the conditions under which a machine learning decision tool can be used. We identify and propose three main guidelines to properly constrain the deployment of machine learning algorithms in society: algorithms should be vetted to ensure that they do not unduly affect historically marginalized groups; they should not systematically override or replace human decision-making processes; and the decision reached using an algorithm should always be explainable and justifiable.

Similar content being viewed by others

1 Introduction

The use of predictive machine learning algorithms (henceforth ML algorithms) to take decisions or inform a decision-making process in both public and private settings can already be observed and promises to be increasingly common. They are used to decide who should be promoted or fired, who should get a loan or an insurance premium (and at what cost), what publications appear on your social media feed [47, 49] or even to map crime hot spots and to try and predict the risk of recidivism of past offenders [66].Footnote 1 When compared to human decision-makers, ML algorithms could, at least theoretically, present certain advantages, especially when it comes to issues of discrimination. Broadly understood, discrimination refers to either wrongful directly discriminatory treatment or wrongful disparate impact. Roughly, direct discrimination captures cases where a decision is taken based on the belief that a person possesses a certain trait, where this trait should not influence one’s decision [39]. A paradigmatic example of direct discrimination would be to refuse employment to a person on the basis of race, national or ethnic origin, colour, religion, sex, age or mental or physical disability, among other possible grounds. In contrast, disparate impact discrimination, or indirect discrimination, captures cases where a facially neutral rule disproportionally disadvantages a certain group [1, 39]. For instance, to demand a high school diploma for a position where it is not necessary to perform well on the job could be indirectly discriminatory if one can demonstrate that this unduly disadvantages a protected social group [28].

One potential advantage of ML algorithms is that they could, at least theoretically, diminish both types of discrimination. By (fully or partly) outsourcing a decision to an algorithm, the process could become more neutral and objective by removing human biases [8, 13, 37]. This prospect is not only channelled by optimistic developers and organizations which choose to implement ML algorithms.Footnote 2 Despite that the discriminatory aspects and general unfairness of ML algorithms is now widely recognized in academic literature – as will be discussed throughout – some researchers also take the idea that machines may well turn out to be less biased and problematic than humans seriously [33, 37, 38, 58, 59]. Roughly, according to them, algorithms could allow organizations to make decisions more reliable and constant. They could even be used to combat direct discrimination. Moreover, Sunstein et al. [37] maintain that large and inclusive datasets could be used to promote diversity, equality and inclusion. In principle, inclusion of sensitive data like gender or race could be used by algorithms to foster these goals [37]. In this paper, however, we show that this optimism is at best premature, and that extreme caution should be exercised by connecting studies on the potential impacts of ML algorithms with the philosophical literature on discrimination to delve into the question of under what conditions algorithmic discrimination is wrongful.

This paper pursues two main goals. First, we show how the use of algorithms challenges the common, intuitive definition of discrimination. Second, we show how clarifying the question of when algorithmic discrimination is wrongful is essential to answer the question of how the use of algorithms should be regulated in order to be legitimate. To pursue these goals, the paper is divided into four main sections. First, we identify different features commonly associated with the contemporary understanding of discrimination from a philosophical and normative perspective and distinguish between its direct and indirect variants. We then discuss how the use of ML algorithms can be thought as a means to avoid human discrimination in both its forms. Second, we show how ML algorithms can nonetheless be problematic in practice due to at least three of their features: (1) the data-mining process used to train and deploy them and the categorizations they rely on to make their predictions; (2) their automaticity and the generalizations they use; and (3) their opacity. Thirdly, we discuss how these three features can lead to instances of wrongful discrimination in that they can compound existing social and political inequalities, lead to wrongful discriminatory decisions based on problematic generalizations, and disregard democratic requirements. Despite these problems, fourthly and finally, we discuss how the use of ML algorithms could still be acceptable if properly regulated. Consequently, we show that even if we approach the optimistic claims made about the potential uses of ML algorithms with an open mind, they should still be used only under strict regulations.

2 Discrimination, artificial intelligence, and humans

Discrimination is a contested notion that is surprisingly hard to define despite its widespread use in contemporary legal systems. It is commonly accepted that we can distinguish between two types of discrimination: discriminatory treatment, or direct discrimination, and disparate impact, or indirect discrimination.Footnote 3 First, direct discrimination captures the main paradigmatic cases that are intuitively considered to be discriminatory. In a nutshell, there is an instance of direct discrimination when a discriminator treats someone worse than another on the basis of trait P, where P should not influence how one is treated [24, 34, 39, 46]. For many, the main purpose of anti-discriminatory laws is to protect socially salient groupsFootnote 4 from disadvantageous treatment [6, 28, 32, 46]. These include, but are not necessarily limited to, race, national or ethnic origin, colour, religion, sex, age, mental or physical disability, and sexual orientation.Footnote 5

In contrast, disparate impact, or indirect, discrimination obtains when a facially neutral rule discriminates on the basis of some trait Q, but the fact that a person possesses trait P is causally linked to that person being treated in a disadvantageous manner under Q [35, 39, 46]. In plain terms, indirect discrimination aims to capture cases where a rule, policy, or measure is apparently neutral, does not necessarily rely on any bias or intention to discriminate, and yet produces a significant disadvantage for members of a protected group when compared with a cognate group [20, 35, 42].Footnote 6 Accordingly, indirect discrimination highlights that some disadvantageous, discriminatory outcomes can arise even if no person or institution is biased against a socially salient group. Some facially neutral rules may, for instance, indirectly reconduct the effects of previous direct discrimination.

To illustrate, imagine a company that requires a high school diploma to be promoted or hired to well-paid blue-collar positions. Even if the possession of the diploma is not necessary to perform well on the job, the company nonetheless takes it to be a good proxy to identify hard-working candidates. However, it turns out that this requirement overwhelmingly affects a historically disadvantaged racial minority because members of this group are less likely to complete a high school education. Arguably, this case would count as an instance of indirect discrimination even if the company did not intend to disadvantage the racial minority and even if no one in the company has any objectionable mental states such as implicit biases or racist attitudes against the group. What matters here is that an unjustifiable barrier (the high school diploma) disadvantages a socially salient group.Footnote 7

Hence, anti-discrimination laws aim to protect individuals and groups from two standard types of wrongful discrimination. The question of what precisely the wrong-making feature of discrimination is remains contentious [for a summary of these debates, see 4, 5, 1]. However, we can generally say that the prohibition of wrongful direct discrimination aims to ensure that wrongful biases and intentions to discriminate against a socially salient group do not influence the decisions of a person or an institution which is empowered to make official public decisions or who has taken on a public role (i.e. an employer, or someone who provides important goods and services to the public) [46]. Similarly, the prohibition of indirect discrimination is a way to ensure that apparently neutral rules, norms and measures do not further disadvantage historically marginalized groups, unless the rules, norms or measures are necessary to attain a socially valuable goal and that they do not infringe upon protected rights more than they need to [35, 39, 42].Footnote 8

2.1 Using algorithms to combat discrimination

The inclusion of algorithms in decision-making processes can be advantageous for many reasons. Two aspects are worth emphasizing here: optimization and standardization. One goal of automation is usually “optimization” understood as efficiency gains. The objective is often to speed up a particular decision mechanism by processing cases more rapidly. Moreover, this is often made possible through standardization and by removing human subjectivity. The use of algorithms can ensure that a decision is reached quickly and in a reliable manner by following a predefined, standardized procedure. For instance, the use of ML algorithm to improve hospital management by predicting patient queues, optimizing scheduling and thus generally improving workflow can in principle be justified by these two goals [50].

Even though fairness is overwhelmingly not the primary motivation for automating decision-making and that it can be in conflict with optimization and efficiency—thus creating a real threat of trade-offs and of sacrificing fairness in the name of efficiency—many authors contend that algorithms nonetheless hold some potential to combat wrongful discrimination in both its direct and indirect forms [33, 37, 38, 58, 59]. Against direct discrimination, (fully or party) outsourcing a decision-making process could ensure that a decision is taken on the basis of justifiable criteria. By definition, an algorithm does not have interests of its own; ML algorithms in particular function on the basis of observed correlations [13, 66]. Hence, some authors argue that ML algorithms are not necessarily discriminatory and could even serve anti-discriminatory purposes.

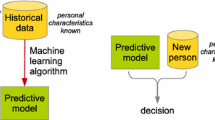

In their work, Kleinberg et al. [37] have particularly systematized this argument. They identify at least three reasons in support this theoretical conclusion. Before we consider their reasons, however, it is relevant to sketch how ML algorithms work. Take the case of “screening algorithms”, i.e., algorithms used to decide which person is likely to produce particular outcomes—like maximizing an enterprise’s revenues, who is at high flight risk after receiving a subpoena, or which college applicants have high academic potential [37, 38]. In these cases, an algorithm is used to provide predictions about an individual based on observed correlations within a pre-given dataset. This predictive process relies on two distinct algorithms: “one algorithm (the ‘screener’) that for every potential applicant produces an evaluative score (such as an estimate of future performance); and another algorithm (‘the trainer’) that uses data to produce the screener that best optimizes some objective function” [37]. Notice that though humans intervene to provide the objectives to the trainer, the screener itself is a product of another algorithm (this plays an important role to make sense of the claim that these predictive algorithms are unexplainable—but more on that later).Footnote 9

Kleinberg et al. fully recognize that we should not assume that ML algorithms are objective since they can be biased by different factors—discussed in more details below. Yet, they argue that the use of ML algorithms can be useful to combat discrimination. First, given that the actual reasons behind a human decision are sometimes hidden to the very person taking a decision—since they often rely on intuitions and other non-conscious cognitive processes—adding an algorithm in the decision loop can be a way to ensure that it is informed by clearly defined and justifiable variables and objectives [; see also 33, 37, 60]. If it turns out that the screener reaches discriminatory decisions, it can be possible, to some extent, to ponder if the outcome(s) the trainer aims to maximize is appropriate or to ask if the data used to train the algorithms was representative of the target population. Consequently, the use of these tools may allow for an increased level of scrutiny, which is itself a valuable addition.

For instance, we could imagine a screener designed to predict the revenues which will likely be generated by a salesperson in the future. The algorithm provides an input that enables an employer to hire the person who is likely to generate the highest revenues over time. If it turns out that the algorithm is discriminatory, instead of trying to infer the thought process of the employer, we can look directly at the trainer. Using an algorithm can in principle allow us to "disaggregate" the decision more easily than a human decision: to some extent, we can isolate the different predictive variables considered and evaluate whether the algorithm was given “an appropriate outcome to predict.” As Kleinberg et al. write: “it should be emphasized that the ability even to ask this question is a luxury” [; see also 37, 38, 59]. Hence, if the algorithm in the present example is discriminatory, we can ask whether it considers gender, race, or another social category, and how it uses this information, or if the search for revenues should be balanced against other objectives, such as having a diverse staff. Consequently, the use of algorithms could be used to de-bias decision-making: the algorithm itself has no hidden agenda. It simply gives predictors maximizing a predefined outcome.

Second, it also becomes possible to precisely quantify the different trade-offs one is willing to accept. For instance, one could aim to eliminate disparate impact as much as possible without sacrificing unacceptable levels of productivity. Consider the following scenario that Kleinberg et al. [37] introduce:

A state government uses an algorithm to screen entry-level budget analysts. The algorithm gives a preference to applicants from the most prestigious colleges and universities, because those applicants have done best in the past. The preference has a disproportionate adverse effect on African-American applicants.

This may amount to an instance of indirect discrimination. However, recall that for something to be indirectly discriminatory, we have to ask three questions: (1) does the process have a disparate impact on a socially salient group despite being facially neutral?; (2) Are the aims of the process legitimate and aligned with the goals of a socially valuable institution?; and (3) Does it infringe upon protected rights more than necessary to attain this legitimate goal? Accordingly, this shows how this case may be more complex than it appears: it is warranted to choose the applicants who will do a better job, yet, this process infringes on the right of African-American applicants to have equal employment opportunities by using a very imperfect—and perhaps even dubious—proxy (i.e., having a degree from a prestigious university). Is the measure nonetheless acceptable?

This question is the same as the one that would arise if only human decision-makers were involved but resorting to algorithms could prove useful in this case because it allows for a quantification of the disparate impact. Algorithms could be used to produce different scores balancing productivity and inclusion to mitigate the expected impact on socially salient groups [37]. In the case at hand, this may empower humans “to answer exactly the question, ‘What is the magnitude of the disparate impact, and what would be the cost of eliminating or reducing it?’” [37]. Therefore, the use of algorithms could allow us to try out different combinations of predictive variables and to better balance the goals we aim for, including productivity maximization and respect for the equal rights of applicants.

Thirdly, and finally, it is possible to imagine algorithms designed to promote equity, diversity and inclusion. In principle, sensitive data like race or gender could be used to maximize the inclusiveness of algorithmic decisions and could even correct human biases. As Kleinberg et al. point out, it is at least theoretically possible to design algorithms to foster inclusion and fairness. This could be done by giving an algorithm access to sensitive data. From there, a ML algorithm could foster inclusion and fairness in two ways. First, it could use this data to balance different objectives (like productivity and inclusion), and it could be possible to specify a certain threshold of inclusion. For instance, it is theoretically possible to specify the minimum share of applicants who should come from historically marginalized groups [; see also 37, 38, 59]. This could be included directly into the algorithmic process. Second, it is also possible to imagine algorithms capable of correcting for otherwise hidden human biases [37, 58, 59]. Consider the following scenario: some managers hold unconscious biases against women. Hence, they provide meaningful and accurate assessment of the performance of their male employees but tend to rank women lower than they deserve given their actual job performance [37]. A program is introduced to predict which employee should be promoted to management based on their past performance—e.g. past sales levels—and managers’ ratings. An algorithm that is “gender-blind” would use the managers’ feedback indiscriminately and thus replicate the sexist bias. However, if the program is given access to gender information and is “aware” of this variable, then it could correct the sexist bias by screening out the managers’ inaccurate assessment of women by detecting that these ratings are inaccurate for female workers. This would be impossible if the ML algorithms did not have access to gender information. Hence, the algorithm could prioritize past performance over managerial ratings in the case of female employee because this would be a better predictor of future performance.Footnote 10 As Kleinberg et al. [37] write:

Since the algorithm is tasked with one and only one job – predict the outcome as accurately as possible – and in this case has access to gender, it would on its own choose to use manager ratings to predict outcomes for men but not for women. The consequence would be to mitigate the gender bias in the data.

In short, the use of ML algorithms could in principle address both direct and indirect instances of discrimination in many ways. Theoretically, it could help to ensure that a decision is informed by clearly defined and justifiable variables and objectives; it potentially allows the programmers to identify the trade-offs between the rights of all and the goals pursued; and it could even enable them to identify and mitigate the influence of human biases. Our goal in this paper is not to assess whether these claims are plausible or practically feasible given the performance of state-of-the-art ML algorithms. It is rather to argue that even if we grant that there are plausible advantages, automated decision-making procedures can nonetheless generate discriminatory results.

3 Discriminatory machine-learning algorithms

Despite these potential advantages, ML algorithms can still lead to discriminatory outcomes in practice. Troublingly, this possibility arises from internal features of such algorithms; algorithms can be discriminatory even if we put aside the (very real) possibility that some may use algorithms to camouflage their discriminatory intents [7]. We single out three aspects of ML algorithms that can lead to discrimination: the data-mining process and categorization, their automaticity, and their opacity. In the next section, we flesh out in what ways these features can be wrongful.

3.1 Discrimination by data-mining and categorization

As Barocas and Selbst’s seminal paper on this subject clearly shows [7], there are at least four ways in which the process of data-mining itself and algorithmic categorization can be discriminatory. First, the distinction between target variable and class labels, or classifiers, can introduce some biases in how the algorithm will function. Briefly, target variables are the outcomes of interest—what data miners are looking for—and class labels “divide all possible value of the target variable into mutually exclusive categories” [7]. For instance, to decide if an email is fraudulent—the target variable—an algorithm relies on two class labels: an email either is or is not spam given relatively well-established distinctions. The main problem is that it is not always easy nor straightforward to define the proper target variable, and this is especially so when using evaluative, thus value-laden, terms such as a “good employee” or a “potentially dangerous criminal.” What’s more, the adopted definition may lead to disparate impact discrimination. For instance, being awarded a degree within the shortest time span possible may be a good indicator of the learning skills of a candidate, but it can lead to discrimination against those who were slowed down by mental health problems or extra-academic duties—such as familial obligations. This, in turn, may disproportionately disadvantage certain socially salient groups [7].

Second, as mentioned above, ML algorithms are massively inductive: they learn by being fed a large set of examples of what is spam, what is a good employee, etc. Consequently, the examples used can introduce biases in the algorithm itself. First, the training data can reflect prejudices and present them as valid cases to learn from. Examples of this abound in the literature. For instance, an algorithm used by Amazon discriminated against women because it was trained using CVs from their overwhelmingly male staff—the algorithm “taught” itself to penalize CVs including the word “women” (e.g. “women’s chess club captain”) [17]. Second, data-mining can be problematic when the sample used to train the algorithm is not representative of the target population; the algorithm can thus reach problematic results for members of groups that are over- or under-represented in the sample. For instance, Hewlett-Packard’s facial recognition technology has been shown to struggle to identify darker-skinned subjects because it was trained using white faces. If this computer vision technology were to be used by self-driving cars, it could lead to very worrying results for example by failing to recognize darker-skinned subjects as persons [17]. Hence, in both cases, it can inherit and reproduce past biases and discriminatory behaviours [7].

Thirdly, given that data is necessarily reductive and cannot capture all the aspects of real-world objects or phenomena, organizations or data-miners must “make choices about what attributes they observe and subsequently fold into their analysis” [7]. This type of representation may not be sufficiently fine-grained to capture essential differences and may consequently lead to erroneous results. To go back to an example introduced above, a model could assign great weight to the reputation of the college an applicant has graduated from. However, this reputation does not necessarily reflect the applicant’s effective skills and competencies, and may disadvantage marginalized groups [7, 15].

Fourthly, the use of ML algorithms may lead to discriminatory results because of the proxies chosen by the programmers. This is an especially tricky question given that some criteria may be relevant to maximize some outcome and yet simultaneously disadvantage some socially salient groups [7]. This problem is not particularly new, from the perspective of anti-discrimination law, since it is at the heart of disparate impact discrimination: some criteria may appear neutral and relevant to rank people vis-à-vis some desired outcomes—be it job performance, academic perseverance or other—but these very criteria may be strongly correlated to membership in a socially salient group. By relying on such proxies, the use of ML algorithms may consequently reconduct and reproduce existing social and political inequalities [7]. To illustrate, consider the now well-known COMPAS program, a software used by many courts in the United States to evaluate the risk of recidivism. It uses risk assessment categories including “man with no high school diploma,” “single and don’t have a job,” considers the criminal history of friends and family, and the number of arrests in one’s life, among others predictive clues [; see also 8, 17]. Yet, as Chun points out, “given the over- and under-policing of certain areas within the United States (…) [these data] are arguably proxies for racism, if not race” [17].

Therefore, the data-mining process and the categories used by predictive algorithms can convey biases and lead to discriminatory results which affect socially salient groups even if the algorithm itself, as a mathematical construct, is a priori neutral and only looks for correlations associated with a given outcome. We cannot ignore the fact that human decisions, human goals and societal history all affect what algorithms will find. They cannot be thought as pristine and sealed from past and present social practices.

3.2 Discrimination through automaticity

In addition to the issues raised by data-mining and the creation of classes or categories, two other aspects of ML algorithms should give us pause from the point of view of discrimination. At the risk of sounding trivial, predictive algorithms, by design, aim to inform decision-making by making predictions about particular cases on the basis of observed correlations in large datasets [36, 62]. This is the very process at the heart of the problems highlighted in the previous section: when input, hyperparameters and target labels intersect with existing biases and social inequalities, the predictions made by the machine can compound and maintain them. However, there is a further issue here: this predictive process may be wrongful in itself, even if it does not compound existing inequalities.

The predictive process raises the question of whether it is discriminatory to use observed correlations in a group to guide decision-making for an individual. The very nature of ML algorithms risks reverting to wrongful generalizations to judge particular cases [12, 48]. As Orwat observes: “In the case of prediction algorithms, such as the computation of risk scores in particular, the prediction outcome is not the probable future behaviour or conditions of the persons concerned, but usually an extrapolation of previous ratings of other persons by other persons” [48].

Consider the following scenario: an individual X belongs to a socially salient group—say an indigenous nation in Canada—and has several characteristics in common with persons who tend to recidivate, such as having physical and mental health problems or not holding on to a job for very long. Such outcomes are, of course, connected to the legacy and persistence of colonial norms and practices (see above section). However, in the particular case of X, many indicators also show that she was able to turn her life around and that her life prospects improved. Clearly, given that this is an ethically sensitive decision which has to weigh the complexities of historical injustice, colonialism, and the particular history of X, decisions about her shouldn’t be made simply on the basis of an extrapolation from the scores obtained by the members of the algorithmic group she was put into. Doing so would impose an unjustified disadvantage on her by overly simplifying the case; the judge here needs to consider the specificities of her case. While a human agent can balance group correlations with individual, specific observations, this does not seem possible with the ML algorithms currently used. As we argue in more detail below, this case is discriminatory because using observed group correlations only would fail in treating her as a separate and unique moral agent and impose a wrongful disadvantage on her based on this generalization. Moreover, if observed correlations are constrained by the principle of equal respect for all individual moral agents, this entails that some generalizations could be discriminatory even if they do not affect socially salient groups. If so, it may well be that algorithmic discrimination challenges how we understand the very notion of discrimination.

3.3 Discrimination and opacity

A final issue ensues from the intrinsic opacity of ML algorithms. Though it is possible to scrutinize how an algorithm is constructed to some extent and try to isolate the different predictive variables it uses by experimenting with its behaviour, as Kleinberg et al. argue [38], we can never truly know how these algorithms reach a particular result. This opacity represents a significant hurdle to the identification of discriminatory decisions: in many cases, even the experts who designed the algorithm cannot fully explain how it reached its decision. This opacity of contemporary AI systems is not a bug, but one of their features: increased predictive accuracy comes at the cost of increased opacity. Roughly, contemporary artificial neural networks disaggregate data into a large number of “features” and recognize patterns in the fragmented data through an iterative and self-correcting propagation process rather than trying to emulate logical reasoning [for a more detailed presentation see 12, 14, 16, 41, 45]. As a result, we no longer have access to clear, logical pathways guiding us from the input to the output.

Of course, the algorithmic decisions can still be to some extent scientifically explained, since we can spell out how different types of learning algorithms or computer architectures are designed, analyze data, and “observe” correlations. However, they are opaque and fundamentally unexplainable in the sense that we do not have a clearly identifiable chain of reasons detailing how ML algorithms reach their decisions. Explanations cannot simply be extracted from the innards of the machine [27, 44]. This may not be a problem, however. For instance, it is not necessarily problematic not to know how Spotify generates music recommendations in particular cases. However, AI’s explainability problem raises sensitive ethical questions when automated decisions affect individual rights and wellbeing. Given what was highlighted above and how AI can compound and reproduce existing inequalities or rely on problematic generalizations, the fact that it is unexplainable is a fundamental concern for anti-discrimination law: to explain how a decision was reached is essential to evaluate whether it relies on wrongful discriminatory reasons. We return to this question in more detail below.

4 AI and wrongful discrimination

It follows from Sect. 3 that the very process of using data and classifications along with the automatic nature and opacity of algorithms raise significant concerns from the perspective of anti-discrimination law. Yet, we need to consider under what conditions algorithmic discrimination is wrongful. For instance, the question of whether a statistical generalization is objectionable is context dependent. It seems generally acceptable to impose an age limit (typically either 55 or 60) on commercial airline pilots given the high risks associated with this activity and that age is a sufficiently reliable proxy for a person’s vision, hearing, and reflexes [54]. Hence, not every decision derived from a generalization amounts to wrongful discrimination. The same can be said of opacity. As mentioned, the fact that we do not know how Spotify’s algorithm generates music recommendations hardly seems of significant normative concern. Yet, it would be a different issue if Spotify used its users’ data to choose who should be considered for a job interview. In the following section, we discuss how the three different features of algorithms discussed in the previous section can be said to be wrongfully discriminatory.

4.1 Data, categorization, and historical justice

The first, main worry attached to data use and categorization is that it can compound or reconduct past forms of marginalization. As the work of Barocas and Selbst shows [7], the data used to train ML algorithms can be biased by over- or under-representing some groups, by relying on tendentious example cases, and the categorizers created to sort the data potentially import objectionable subjective judgments. In addition, algorithms can rely on problematic proxies that overwhelmingly affect marginalized social groups. This, interestingly, does not represent a significant challenge for our normative conception of discrimination: many accounts argue that disparate impact discrimination is wrong—at least in part—because it reproduces and compounds the disadvantages created by past instances of directly discriminatory treatment [3, 30, 39, 40, 57]. Putting aside the possibility that some may use algorithms to hide their discriminatory intent—which would be an instance of direct discrimination—the main normative issue raised by these cases is that a facially neutral tool maintains or aggravates existing inequalities between socially salient groups.

This echoes the thought that indirect discrimination is secondary compared to directly discriminatory treatment. Under this view, it is not that indirect discrimination has less significant impacts on socially salient groups—the impact may in fact be worse than instances of directly discriminatory treatment—but direct discrimination is the “original sin” and indirect discrimination is temporally secondary. As Khaitan [35] succinctly puts it:

[indirect discrimination] is parasitic on the prior existence of direct discrimination, even though it may be equally or possibly even more condemnable morally. (…) [Direct] discrimination is the original sin, one that creates the systemic patterns that differentially allocate social, economic, and political power between social groups. These patterns then manifest themselves in further acts of direct and indirect discrimination. Indirect discrimination is ‘secondary’, in this sense, because it comes about because of, and after, widespread acts of direct discrimination.

Even though Khaitan is ultimately critical of this conceptualization of the wrongfulness of indirect discrimination, it is a potential contender to explain why algorithmic discrimination in the cases singled out by Barocas and Selbst is objectionable. Following this thought, algorithms which incorporate some biases through their data-mining procedures or the classifications they use would be wrongful when these biases disproportionately affect groups which were historically—and may still be—directly discriminated against. Notice that there are two distinct ideas behind this intuition: (1) indirect discrimination is wrong because it compounds or maintains disadvantages connected to past instances of direct discrimination and (2) some add that this is so because indirect discrimination is temporally secondary [39, 62].Footnote 11 In this paper, however, we argue that if the first idea captures something important about (some instances of) algorithmic discrimination, the second one should be rejected.

This idea that indirect discrimination is wrong because it maintains or aggravates disadvantages created by past instances of direct discrimination is largely present in the contemporary literature on algorithmic discrimination. For instance, Zimmermann and Lee-Stronach [67] argue that using observed correlations in large datasets to take public decisions or to distribute important goods and services such as employment opportunities is unjust if it does not include information about historical and existing group inequalities such as race, gender, class, disability, and sexuality. Otherwise, it will simply reproduce an unfair social status quo. Similarly, Rafanelli [52] argues that the use of algorithms facilitates institutional discrimination; i.e. instances of indirect discrimination that are unintentional and arise through the accumulated, though uncoordinated, effects of individual actions and decisions. The case of Amazon’s algorithm used to survey the CVs of potential applicants is a case in point. The algorithm reproduced sexist biases by observing patterns in how past applicants were hired.

Two things are worth underlining here. First, as mentioned, this discriminatory potential of algorithms, though significant, is not particularly novel with regard to the question of how to conceptualize discrimination from a normative perspective. It raises the questions of the threshold at which a disparate impact should be considered to be discriminatory, what it means to tolerate disparate impact if the rule or norm is both necessary and legitimate to reach a socially valuable goal, and how to inscribe the normative goal of protecting individuals and groups from disparate impact discrimination into law.Footnote 12 All these questions unfortunately lie beyond the scope of this paper. What we want to highlight here is that recognizing that compounding and reconducting social inequalities is central to explaining the circumstances under which algorithmic discrimination is wrongful. As argued below, this provides us with a general guideline informing how we should constrain the deployment of predictive algorithms in practice.

Second, however, this idea that indirect discrimination is temporally secondary to direct discrimination, though perhaps intuitively appealing, is under severe pressure when we consider instances of algorithmic discrimination. The idea that indirect discrimination is only wrongful because it replicates the harms of direct discrimination is explicitly criticized by some in the contemporary literature [20, 21, 35]. Although this temporal connection is true in many instances of indirect discrimination, in the next section, we argue that indirect discrimination – and algorithmic discrimination in particular – can be wrong for other reasons.

4.2 AI, discrimination and generalizations

Given what was argued in Sect. 3, the use of ML algorithms raises the question of whether it can lead to other types of discrimination which do not necessarily disadvantage historically marginalized groups or even socially salient groups.Footnote 13 To address this question, two points are worth underlining. First, the typical list of protected grounds (including race, national or ethnic origin, colour, religion, sex, age or mental or physical disability) is an open-ended list. This is necessary to be able to capture new cases of discriminatory treatment or impact. For instance, notice that the grounds picked out by the Canadian constitution (listed above) do not explicitly include sexual orientation. Yet, in practice, it is recognized that sexual orientation should be covered by anti-discrimination laws— i.e. Section 15 of the Canadian Constitution [34]. Accordingly, the fact that some groups are not currently included in the list of protected grounds or are not (yet) socially salient is not a principled reason to exclude them from our conception of discrimination.

This brings us to the second point. Algorithms can unjustifiably disadvantage groups that are not socially salient or historically marginalized. To illustrate, consider the following case: an algorithm is introduced to decide who should be promoted in company Y. The algorithm finds a correlation between being a “bad” employee and suffering from depression [9, 63]. Consequently, it discriminates against persons who are susceptible to suffer from depression based on different factors. Notice that this group is neither socially salient nor historically marginalized. Indeed, many people who belong to the group “susceptible to depression” most likely ignore that they are a part of this group. This highlights two problems: first it raises the question of the information that can be used to take a particular decision; in most cases, medical data should not be used to distribute social goods such as employment opportunities. Second, however, this case also highlights another problem associated with ML algorithms: we need to consider the underlying question of the conditions under which generalizations can be used to guide decision-making procedures. This second problem is especially important since this is an essential feature of ML algorithms: they function by matching observed correlations with particular cases. However, this very generalization is questionable: some types of generalizations seem to be legitimate ways to pursue valuable social goals but not others. As mentioned above, we can think of putting an age limit for commercial airline pilots to ensure the safety of passengers [54] or requiring an undergraduate degree to pursue graduate studies – since this is, presumably, a good (though imperfect) generalization to accept students who have acquired the specific knowledge and skill set necessary to pursue graduate studies [5]. However, refusing employment because a person is likely to suffer from depression is objectionable because one’s right to equal opportunities should not be denied on the basis of a probabilistic judgment about a particular health outcome.

In contemporary literature, three main contenders propose explanations of why generalizations can lead to wrongful discrimination: (1) Hellman proposes an expressive view stating that generalizations are wrong when they are demeaning or when they send the message that a person is of less value than another [; see also 2, 29, 31]; (2) Moreau contends that generalizations are wrongful when they subordinate socially salient groups [46]; and (3) Eidelson argues that generalizations are wrong because when someone generalizes about a certain person P, they can fail to treat P as an individual [23, 24].Footnote 14

Of the three proposals, Eidelson’s seems to be the more promising to capture what is wrongful about algorithmic classifications. Hellman’s expressivist account does not seem to be a good fit because it is puzzling how an observed pattern within a large dataset can be taken to express a particular judgment about the value of groups or persons. Moreover, this account struggles with the idea that discrimination can be wrongful even when it involves groups that are not socially salient. This problem is shared by Moreau’s approach: the problem with algorithmic discrimination seems to demand a broader understanding of the relevant groups since some may be unduly disadvantaged even if they are not members of socially salient groups. The very purpose of predictive algorithms is to put us in algorithmic groups or categories on the basis of the data we produce or share with others. Accordingly, the number of potential algorithmic groups is open-ended, and all users could potentially be discriminated against by being unjustifiably disadvantaged after being included in an algorithmic group.

Inputs from Eidelson’s position can be helpful here. For him, discrimination is wrongful because it fails to treat individuals as unique persons; in other words, he argues that anti-discrimination laws aim to ensure that all persons are equally respected as autonomous agents [24]. As he writes [24], in practice, this entails two things:

First, it means paying reasonable attention to relevant ways in which a person has exercised her autonomy, insofar as these are discernible from the outside, in making herself the person she is. Second, it means recognizing that, because she is an autonomous agent, she is capable of deciding how to act for herself. When we act in accordance with these requirements, we deal with people in a way that respects the role they can play and have played in shaping themselves, rather than treating them as determined by demographic categories or other matters of statistical fate.

This underlines that using generalizations to decide how to treat a particular person can constitute a failure to treat persons as separate (individuated) moral agents and can thus be at odds with moral individualism [53]. Generalizations are wrongful when they fail to properly take into account how persons can shape their own life in ways that are different from how others might do so. Public and private organizations which make ethically-laden decisions should effectively recognize that all have a capacity for self-authorship and moral agency.Footnote 15

Nonetheless, notice that this does not necessarily mean that all generalizations are wrongful: it depends on how they are used, where they stem from, and the context in which they are used. As Eidelson [24] writes on this point:

we can say with confidence that such discrimination is not disrespectful if it (1) is not coupled with unreasonable non-reliance on other information deriving from a person’s autonomous choices, (2) does not constitute a failure to recognize her as an autonomous agent capable of making such choices, (3) lacks an origin in disregard for her value as a person, and (4) reflects an appropriately diligent assessment given the relevant stakes.

Therefore, some generalizations can be acceptable if they are not grounded in disrespectful stereotypes about certain groups, if one gives proper weight to how the individual, as a moral agent, plays a role in shaping their own life, and if the generalization is justified by sufficiently robust reasons. Applied to the case of algorithmic discrimination, it entails that though it may be relevant to take certain correlations into account, we should also consider how a person shapes her own life because correlations do not tell us everything there is to know about an individual.

Yet, one may wonder if this approach is not overly broad. After all, generalizations may not only be wrong when they lead to discriminatory results. For instance, if we are all put into algorithmic categories, we could contend that it goes against our individuality, but that it does not amount to discrimination.Footnote 16 Eidelson’s own theory seems to struggle with this idea. As Boonin [11] has pointed out, other types of generalization may be wrong even if they are not discriminatory. He compares the behaviour of a racist, who treats black adults like children, with the behaviour of a paternalist who treats all adults like children. If we only consider generalization and disrespect, then both are disrespectful in the same way, though only the actions of the racist are discriminatory.

This is a central concern here because it raises the question of whether algorithmic “discrimination” is closer to the actions of the racist or the paternalist. If we worry only about generalizations, then we might be tempted to say that algorithmic generalizations may be wrong, but it would be a mistake to say that they are discriminatory.

However, we do not think that this would be the proper response. To say that algorithmic generalizations are always objectionable because they fail to treat persons as individuals is at odds with the conclusion that, in some cases, generalizations can be justified and legitimate. The point is that using generalizations is wrongfully discriminatory when they affect the rights of some groups or individuals disproportionately compared to others in an unjustified manner. The wrong of discrimination, in this case, is in the failure to reach a decision in a way that treats all the affected persons fairly. Hence, discrimination, and algorithmic discrimination in particular, involves a dual wrong. As Boonin [11] writes on this point:

there’s something distinctively wrong about discrimination because it violates a combination of (…) basic norms in a distinctive way. One of the basic norms might well be a norm about respect, a norm violated by both the racist and the paternalist, but another might be a norm about fairness, or equality, or impartiality, or justice, a norm that might also be violated by the racist but not violated by the paternalist.

This is, we believe, the wrong of algorithmic discrimination. The position is not that all generalizations are wrongfully discriminatory, but that algorithmic generalizations are wrongfully discriminatory when they fail the meet the justificatory threshold necessary to explain why it is legitimate to use a generalization in a particular situation.Footnote 17

How to precisely define this threshold is itself a notoriously difficult question. This threshold may be more or less demanding depending on what the rights affected by the decision are, as well as the social objective(s) pursued by the measure. To assess whether a particular measure is wrongfully discriminatory, it is necessary to proceed to a justification defence that considers the rights of all the implicated parties and the reasons justifying the infringement on individual rights (on this point, see also [19]). The justification defense aims to minimize interference with the rights of all implicated parties and to ensure that the interference is itself justified by sufficiently robust reasons; this means that the interference must be causally linked to the realization of socially valuable goods, and that the interference must be as minimal as possible. Hence, interference with individual rights based on generalizations is sometimes acceptable. For instance, given the fundamental importance of guaranteeing the safety of all passengers, it may be justified to impose an age limit on airline pilots—though this generalization would be unjustified if it were applied to most other jobs. Yet, to refuse a job to someone because she is likely to suffer from depression seems to overly interfere with her right to equal opportunities. This seems to amount to an unjustified generalization.

This points to two considerations about wrongful generalizations. First, there is the problem of being put in a category which guides decision-making in such a way that disregards how every person is unique because one assumes that this category exhausts what we ought to know about us. For instance, treating a person as someone at risk to recidivate during a parole hearing only based on the characteristics she shares with others is illegitimate because it fails to consider her as a unique agent.Footnote 18 Moreover, as argued above, this is likely to lead to (indirectly) discriminatory results. As mentioned, the factors used by the COMPAS system, for instance, tend to reinforce existing social inequalities.

This brings us to the second consideration. The problem is also that algorithms can unjustifiably use predictive categories to create certain disadvantages. That is, given that ML algorithms function by “learning” how certain variables predict a given outcome, they can capture variables which should not be taken into account or rely on problematic inferences to judge particular cases. For instance, these variables could either function as proxies for legally protected grounds, such as race or health status, or rely on dubious predictive inferences. In these cases, there is a failure to treat persons as equals because the predictive inference uses unjustifiable predictors to create a disadvantage for some. In many cases, the risk is that the generalizations—i.e., the predictive inferences used to judge a particular case—fail to meet the demands of the justification defense. To refuse a job to someone because they are at risk of depression is presumably unjustified unless one can show that this is directly related to a (very) socially valuable goal. Similarly, some Dutch insurance companies charged a higher premium to their customers if they lived in apartments containing certain combinations of letters and numbers (such as 4A and 20C) [25]. In this case, there is presumably an instance of discrimination because the generalization—the predictive inference that people living at certain home addresses are at higher risks—is used to impose a disadvantage on some in an unjustified manner.Footnote 19

As will be argued more in depth in the final section, this supports the conclusion that decisions with significant impacts on individual rights should not be taken solely by an AI system and that we should pay special attention to where predictive generalizations stem from. Moreover, notice how this autonomy-based approach is at odds with some of the typical conceptions of discrimination. First, though members of socially salient groups are likely to see their autonomy denied in many instances—notably through the use of proxies—this approach does not presume that discrimination is only concerned with disadvantages affecting historically marginalized or socially salient groups. Second, it follows from this first remark that algorithmic discrimination is not secondary in the sense that it would be wrongful only when it compounds the effects of direct, human discrimination. The very act of categorizing individuals and of treating this categorization as exhausting what we need to know about a person can lead to discriminatory results if it imposes an unjustified disadvantage. Consequently, tackling algorithmic discrimination demands to revisit our intuitive conception of what discrimination is.

4.3 Opacity and objectification

Thirdly, and finally, one could wonder if the use of algorithms is intrinsically wrong due to their opacity: the fact that ML decisions are largely inexplicable may make them inherently suspect in a democracy.Footnote 20 This point is defended by Strandburg [56]. As she argues, there is a deep problem associated with the use of opaque algorithms because no one, not even the person who designed the algorithm, may be in a position to explain how it reaches a particular conclusion.

For her, this runs counter to our most basic assumptions concerning democracy: to express respect for the moral status of others minimally entails to give them reasons explaining why we take certain decisions, especially when they affect a person’s rights [41, 43, 56]. The practice of reason giving is essential to ensure that persons are treated as citizens and not merely as objects. Importantly, this requirement holds for both public and (some) private decisions. As she writes [55]:

explaining the rationale behind decisionmaking criteria also comports with more general societal norms of fair and nonarbitrary treatment. Moreover, the public has an interest as citizens and individuals, both legally and ethically, in the fairness and reasonableness of private decisions that fundamentally affect people’s lives.

Accordingly, to subject people to opaque ML algorithms may be fundamentally unacceptable, at least when individual rights are affected.

Yet, even if this is ethically problematic, like for generalizations, it may be unclear how this is connected to the notion of discrimination. After all, as argued above, anti-discrimination law protects individuals from wrongful differential treatment and disparate impact [1]. If everyone is subjected to an unexplainable algorithm in the same way, it may be unjust and undemocratic, but it is not an issue of discrimination per se: treating everyone equally badly may be wrong, but it does not amount to discrimination. Nonetheless, the capacity to explain how a decision was reached is necessary to ensure that no wrongful discriminatory treatment has taken place. This explanation is essential to ensure that no protected grounds were used wrongfully in the decision-making process and that no objectionable, discriminatory generalization has taken place. Consequently, a right to an explanation is necessary from the perspective of anti-discrimination law because it is a prerequisite to protect persons and groups from wrongful discrimination [16, 41, 48, 56]. In the next section, we briefly consider what this right to an explanation means in practice.

5 Conclusion: three guidelines for regulating machine learning algorithms and their use

Given that ML algorithms are potentially harmful because they can compound and reproduce social inequalities, and that they rely on generalization disregarding individual autonomy, then their use should be strictly regulated. Yet, these potential problems do not necessarily entail that ML algorithms should never be used, at least from the perspective of anti-discrimination law. Rather, these points lead to the conclusion that their use should be carefully and strictly regulated. However, before identifying the principles which could guide regulation, it is important to highlight two things. First, the context and potential impact associated with the use of a particular algorithm should be considered. Anti-discrimination laws do not aim to protect from any instances of differential treatment or impact, but rather to protect and balance the rights of implicated parties when they conflict [18, 19]. Hence, using ML algorithms in situations where no rights are threatened would presumably be either acceptable or, at least, beyond the purview of anti-discriminatory regulations.

Second, one also needs to take into account how the algorithm is used and what place it occupies in the decision-making process. More precisely, it is clear from what was argued above that fully automated decisions, where a ML algorithm makes decisions with minimal or no human intervention in ethically high stakes situation—i.e., where individual rights are potentially threatened—are presumably illegitimate because they fail to treat individuals as separate and unique moral agents. To avoid objectionable generalization and to respect our democratic obligations towards each other, a human agent should make the final decision—in a meaningful way which goes beyond rubber-stamping—or a human agent should at least be in position to explain and justify the decision if a person affected by it asks for a revision. If this does not necessarily preclude the use of ML algorithms, it suggests that their use should be inscribed in a larger, human-centric, democratic process.

With these clarifications in place, we can identity three main guidelines which should be followed to properly restrict the use of ML algorithms to reduce the risk of, and hopefully avoid, wrongful discrimination:

(1) Machine learning algorithms with potentially significant impacts on individual rights and wellbeing should be vetted to mitigate the risk that they contain biases against historically disadvantaged and socially salient groups, and that they do not result in unjustified disparate impact. Algorithms should not reconduct past discrimination or compound historical marginalization.

This guideline could be implemented in a number of ways. For instance, it resonates with the growing calls for the implementation of certification procedures and labels for ML algorithms [61, 62]. Such labels could clearly highlight an algorithm’s purpose and limitations along with its accuracy and error rates to ensure that it is used properly and at an acceptable cost [64]. This guideline could also be used to demand post hoc analyses of (fully or partially) automated decisions. This would allow regulators to monitor the decisions and possibly to spot patterns of systemic discrimination. Requiring algorithmic audits, for instance, could be an effective way to tackle algorithmic indirect discrimination. They would allow regulators to review the provenance of the training data, the aggregate effects of the model on a given population and even to “impersonate new users and systematically test for biased outcomes” [16].

Beyond this first guideline, we can add the two following ones:

(2) Measures should be designed to ensure that the decision-making process does not use generalizations disregarding the separateness and autonomy of individuals in an unjustified manner. As a consequence, it is unlikely that decision processes affecting basic rights — including social and political ones — can be fully automated.

and.

(3) Protecting all from wrongful discrimination demands to meet a minimal threshold of explainability to publicly justify ethically-laden decisions taken by public or private authorities.

These final guidelines do not necessarily demand full AI transparency and explainability [16, 37]. Yet, different routes can be taken to try to make a decision by a ML algorithm interpretable [26, 56, 65].

First, “explainable AI” is a dynamic technoscientific line of inquiry. Many AI scientists are working on making algorithms more explainable and intelligible [41]. However, nothing currently guarantees that this endeavor will succeed. Alternatively, the explainability requirement can ground an obligation to create or maintain a reason-giving capacity so that affected individuals can obtain the reasons justifying the decisions which affect them. This can be grounded in social and institutional requirements going beyond pure techno-scientific solutions [41]. It is essential to ensure that procedures and protocols protecting individual rights are not displaced by the use of ML algorithms. An employer should always be able to explain and justify why a particular candidate was ultimately rejected, just like a judge should always be in a position to justify why bail or parole is granted or not (beyond simply stating “because the AI told us”). Algorithms may provide useful inputs, but they require the human competence to assess and validate these inputs. This is necessary to respond properly to the risk inherent in generalizations [24, 41] and to avoid wrongful discrimination. Therefore, the use of ML algorithms may be useful to gain in efficiency and accuracy in particular decision-making processes. However, gains in either efficiency or accuracy are never justified if their cost is increased discrimination.

Notes

In this paper, we focus on algorithms used in decision-making for two main reasons. First, the use of ML algorithms in decision-making procedures is widespread and promises to increase in the future. Second, as we discuss throughout, it raises urgent questions concerning discrimination. However, this does not mean that concerns for discrimination does not arise for other algorithms used in other types of socio-technical systems. For instance, we could imagine a computer vision algorithm used to diagnose melanoma that works much better for people who have paler skin tones or a chatbot used to help students do their homework, but which performs poorly when it interacts with children on the autism spectrum. Arguably, in both cases they could be considered discriminatory. For the purpose of this essay, however, we put these cases aside.

Here we are interested in the philosophical, normative definition of discrimination. For a general overview of how discrimination is used in legal systems, see [34].

As Lippert-Rasmussen writes: “A group is socially salient if perceived membership of it is important to the structure of social interactions across a wide range of social contexts” [39].

Direct discrimination should not be conflated with intentional discrimination. In other words, direct discrimination does not entail that there is a clear intent to discriminate on the part of a discriminator. Though instances of intentional discrimination are necessarily directly discriminatory, intent to discriminate is not a necessary element for direct discrimination to obtain. For instance, implicit biases can also arguably lead to direct discrimination [39]. What matters is the causal role that group membership plays in explaining disadvantageous differential treatment. If belonging to a certain group directly explains why a person is being discriminated against, then it is an instance of direct discrimination regardless of whether there is an actual intent to discriminate on the part of a discriminator.

In practice, it can be hard to distinguish clearly between the two variants of discrimination. For instance, it is perfectly possible for someone to intentionally discriminate against a particular social group but use indirect means to do so. The use of literacy tests during the Jim Crow era to prevent African Americans from voting, for example, was a way to use an indirect, “neutral” measure to hide a discriminatory intent. However, the distinction between direct and indirect discrimination remains relevant because it is possible for a neutral rule to have differential impact on a population without being grounded in any discriminatory intent.

This case is inspired, very roughly, by Griggs v. Duke Power [28].

We come back to the question of how to balance socially valuable goals and individual rights in Sect. 4.2 below. However, it may be relevant to flag here that it is generally recognized in democratic and liberal political theory that constitutionally protected individual rights are not absolute. They can be limited either to balance the rights of the implicated parties or to allow for the realization of a socially valuable goal. In the separation of powers, legislators have the mandate of crafting laws which promote the common good, whereas tribunals have the authority to evaluate their constitutionality, including their impacts on protected individual rights. In practice, different tests have been designed by tribunals to assess whether political decisions are justified even if they encroach upon fundamental rights. For instance, in Canada, the “Oakes Test” recognizes that constitutional rights are subjected to reasonable limits “as can be demonstrably justified in a free and democratic society” [51].

Of course, there exists other types of algorithms. However, here we focus on ML algorithms.

Of course, this raises thorny ethical and legal questions. For instance, it is doubtful that algorithms could presently be used to promote inclusion and diversity in this way because the use of sensitive information is strictly regulated. As Kleinberg et al. mention: “From the standpoint of current law, it is not clear that the algorithm can permissibly consider race, even if it ought to be authorized to do so; the [American] Supreme Court allows consideration of race only to promote diversity in education.” [37] Here, we do not deny that the inclusion of such data could be problematic, we simply highlight that its inclusion could in principle be used to combat discrimination. The question of if it should be used all things considered is a distinct one. Moreover, we discuss Kleinberg et al. proposals here to show that algorithms can theoretically contribute to combatting discrimination, but we remain agnostic about whether they can realistically be implemented in practice.

This is perhaps most clear in the work of Lippert-Rasmussen. For him, for there to be an instance of indirect discrimination, two conditions must obtain (among others): “it must be the case that (i) there has been, or presently exists, direct discrimination against the group being subjected to indirect discrimination and (ii) that the indirect discrimination is suitably related to these instances of direct discrimination” [39]. Other types of indirect group disadvantages may be unfair, but they would not be discriminatory for Lippert-Rasmussen. One advantage of this view is that it could explain why we ought to be concerned with only some specific instances of group disadvantage. However, as we argue below, this temporal explanation does not fit well with instances of algorithmic discrimination.

As mentioned above, here we are interested by the normative and philosophical dimensions of discrimination. Consequently, we have to put many questions of how to connect these philosophical considerations to legal norms aside. However, many legal challenges surround the notion of indirect discrimination and how to effectively protect people from it. These include the above mentioned necessary threshold to identify an instance of disparate impact, the question of how to identify the relevant group (and the relevant comparator group) to evaluate the disadvantage, what types of disadvantages are relevant, what the justifiability requirement entails in practice – i.e. in what circumstances is the “practical necessity” defense successful – or whether the discriminator can be held responsible for awarding damages in cases of indirect discrimination. For a general overview of these practical, legal challenges, see Khaitan [34].

A similar point is raised by Gerards and Borgesius [25]. They highlight that: “algorithms can generate new categories of people based on seemingly innocuous characteristics, such as web browser preference or apartment number, or more complicated categories combining many data points” [25]. From there, they argue that anti-discrimination laws should be designed to recognize that the grounds of discrimination are open-ended and not restricted to socially salient groups. However, they do not address the question of why discrimination is wrongful, which is our concern here.

Notice that Eidelson’s position is slightly broader than Moreau’s approach but can capture its intuitions. To fail to treat someone as an individual can be explained, in part, by wrongful generalizations supporting the social subordination of social groups. As such, Eidelson’s account can capture Moreau’s worry, but it is broader. As argued in this section, we can fail to treat someone as an individual without grounding such judgement in an identity shared by a given social group.

And it should be added that even if a particular individual lacks the capacity for moral agency, the principle of the equal moral worth of all human beings requires that she be treated as a separate individual.

We are extremely grateful to an anonymous reviewer for pointing this out.

It may be important to flag that here we also take our distance from Eidelson’s own definition of discrimination. Eidelson defines discrimination with two conditions: “(Differential Treatment Condition) X treat Y less favorably in respect of W than X treats some actual or counterfactual other, Z, in respect of W; and (Explanatory Condition) a difference in how X regards Y P-wise and how X regards or would regard Z P-wise figures in the explanation of this differential treatment.” [22] Notice that this only captures direct discrimination. Indeed, Eidelson is explicitly critical of the idea that indirect discrimination is discrimination properly so called. A full critical examination of this claim would take us too far from the main subject at hand. For an analysis, see [20].

This means that using only ML algorithms in parole hearing would be illegitimate simpliciter. That is, even if it is not discriminatory. Yet, a further issue arises when this categorization additionally reconducts an existing inequality between socially salient groups.

That is, to charge someone a higher premium because her apartment address contains 4A while her neighbour (4B) enjoys a lower premium does seem to be arbitrary and thus unjustifiable.

Interestingly, the question of explainability may not be raised in the same way in autocratic or hierarchical political regimes. Roughly, we can conjecture that if a political regime does not premise its legitimacy on democratic justification, other types of justificatory means may be employed, such as whether or not ML algorithms promote certain preidentified goals or values. This position seems to be adopted by Bell and Pei [10]. They argue that hierarchical societies are legitimate and use the example of China to argue that artificial intelligence will be useful to attain “higher communism” – the state where all machines take care of all menial labour, rendering humans free of using their time as they please – as long as the machines are properly subdued under our collective, human interests. However, it speaks volume that the discussion of how ML algorithms can be used to impose collective values on individuals and to develop surveillance apparatus is conspicuously absent from their discussion of AI. We thank an anonymous reviewer for pointing this out.

References

Altman, A. Discrimination. In Edward N. Zalta (eds) Stanford Encyclopedia of Philosophy, (2020). https://plato.stanford.edu/entries/discrimination/.

Anderson, E., Pildes, R.: Expressive Theories of Law: A General Restatement. Univ. Pensylvania Law Rev. 148(5), 1503–1576 (2000)

Arneson, R.: What is wrongful discrimination. San Diego Law Rev. 43(4), 775–806 (2006)

Alexander, L.: What makes wrongful discrimination wrong? Biases, preferences, stereotypes, and proxies. Univ. Pensylvania Law Rev. 141(149), 151–219 (1992)

Alexander, L. Is Wrongful Discrimination Really Wrong? San Diego Legal Studies Paper No. 17–257 (2016). https://doi.org/10.2139/ssrn.2909277

Baber, H.: Gender conscious. J. Appl. Philos. 18(1), 53–63 (2001)

Barocas, S., Selbst, A.D.: Big data’s disparate impact. Calif. Law Rev. 104(3), 671–732 (2016)

Barry-Jester, A., Casselman, B., and Goldstein, C. The New Science of Sentencing: Should Prison Sentences Be Based on Crimes That Haven't Been Committed Yet? The Marshall Project, August 4 (2015). Online: https://www.themarshallproject.org/2015/08/04/the-new-science-of-sentencing.

Bechmann, A. and G. C. Bowker. AI, discrimination and inequality in a 'post' classification era. ICA 2017, 25 May 2017, San Diego, United States, Conference abstract for conference (2017).

Bell, D., Pei, W.: Just hierarchy: why social hierarchies matter in China and the rest of the World. Princeton university press, Princeton (2022)

Boonin, D.: Review of Discrimination and Disrespect by B. Eidelson. Ethics. 128(1), 240–245 (2017).

Borgesius, F.: Discrimination, Artificial Intelligence, and Algorithmic Decision-Making. Strasbourg: Council of Europe - Directorate General of Democracy, Strasbourg. https://dare.uva.nl/search?identifier=7bdabff5-c1d9-484f-81f2-e469e03e2360. (2018). Accessed 11 Nov 2022.

Bozdag, E.: Bias in algorithmic filtering and personalization. Ethics Inf. Technol. 15, 209–227 (2013)

Burrell, J.: How the machine “thinks”: understanding opacity in machine learning algorithms. Big Data Soc. 3(1), 1–12 (2016)

Chapman, A., Grylls, P., Ugwudike, P., Gammack, D., and Ayling, J. 2022. A Data-driven analysis of the interplay between Criminological theory and predictive policing algorithms. In 2022 ACM Conference on Fairness, Accountability, and Transparency (FAccT ’22), June 21–24, 2022, Seoul, Republic of Korea. ACM, New York, NY, USA, 10 pages. https://doi.org/10.1145/3531146.3533071

Chesterman, S.: We, the robots: regulating artificial intelligence and the limits of the law. Cambridge university press, London, UK (2021)

Chun, W.: Discriminating data: correlation, neighborhoods, and the new politics of recognition. The MIT Press, Cambridge, MA and London, UK (2021)

Cohen, G.A.: On the currency of egalitarian justice. Ethics 99(4), 906–944 (1989)

Collins, H.: Justice for foxes: fundamental rights and justification of indirect discrimination. In: Collins, H., Khaitan, T. (eds.) Foundations of indirect discrimination law, pp. 249–278. Hart, Oxford, UK (2018)

Cossette-Lefebvre, H.: Direct and Indirect Discrimination: A Defense of the Disparate Impact Model. Public Affairs Quarterly 34(4), 340–367 (2020)

Doyle, O.: Direct discrimination, indirect discrimination and autonomy. Oxf. J. Leg. Stud. 27(3), 537–553 (2007)

Ehrenfreund, M. The machines that could rid courtrooms of racism. The Washington Post (2016). Online: https://www.washingtonpost.com/news/wonk/wp/2016/08/18/why-a-computer-program-that-judges-rely-on-around-the-country-was-accused-of-racism/.

Eidelson, B.: Treating people as individuals. In: Hellman, D., Moreau, S. (eds.) Philosophical foundations of discrimination law, pp. 203–227. Oxford university press, Oxford, UK (2013)

Eidelson, B.: Discrimination and disrespect. Oxford university press, Oxford, UK (2015)

Gerards, J., Borgesius, F.Z.: Protected grounds and the system of non-discrimination law in the context of algorithmic decision-making and artificial intelligence. Colo. Technol. Law J. 20, 1–56 (2022)

Günther, M., Kasirzadeh, A.: Algorithmic and human decision making: for a double standard of transparency. AI Soc. 37, 375–381 (2022)

Graaf, M. M., and Malle, B. (2017). How people explain action (and Autonomous Intelligent Systems Should Too). AAAI Fall Symposia. https://www.seman ticsc holar.org/ paper/ How- People- Expla in-Action- (and- Auton omous- Syste ms- Graaf- Malle/ 22da5 f6f70 be46c 8fbf2 33c51 c9571 f5985 b69ab

Griggs v. Duke Power Co., 401 U.S. 424. United States Supreme Court. https://supreme.justia.com/cases/federal/us/401/424/. (1971). Accessed 11 Nov 2022

Hellman, D.: When is discrimination wrong? Cambridge. Harvard University Press, MA (2008)

Hellman, D.: Indirect discrimination and the duty to avoid compounding injustice. In: Collins, H., Khaitan, T. (eds.) foundations of indirect discrimination law, pp. 105–122. Hart Publishing, Oxford, UK and Portland, OR (2018)