Formative assessment in higher education: an exploratory study within programs for professionals in education

- 1University of Genoa, Genoa, Italy

- 2St. Thomas University, Fredericton, NB, Canada

This study explores how prospective professionals in higher education can learn about and apply formative assessment methods relevant to their future educational workplaces. In the academic year 2022–23, 156 pre-service teachers, social workers, and heads of social services took part in a three-stage mixed-method study on university learning experiences involving formative assessment practices. They were exposed to self-, peer-, and group-assessment strategies. Data collected after each stage revealed participants’ perspectives on each method. Findings show that students who engaged in formative assessment comprehended assessment complexity and were motivated to use diverse assessment forms. Formative assessment proves effective for both evaluation and development, supporting higher education students in honing assessment competencies for future professional roles in educational and social sectors.

1 Introduction and rationale

The growing emphasis on formative assessment represents a challenge for universities, schools, and educational institutions within traditionally summative assessment cultures. In 2005, the Organization for Economic Cooperation and Development (OECD) declared that schools could support academic development through formative assessment, mainly for underachieving students (OECD, 2005). The OECD (2005) also underscored that formative assessment improves the retention of learning and the quality of students’ work. Similarly, the United Nations Educational, Scientific and Cultural Organization (UNESCO) emphasized how formative assessment can help students’ learning during emergencies and crises (Bawane and Sharma, 2020). A recent study commissioned by the European Commission underscored that “formative assessment needs to place the learners themselves at the center of the learning and assessment processes, taking a more active and central role both as individual, self-regulated learners, and as critical peers” (Cefai et al., 2021, p. 7). These studies indicate the potential of formative assessment strategies to create and build meaningful learning environments in a variety of educational contexts.

In Europe, there has been discussion of the correspondence between the 1999 implementation of the Bologna Process and the increase in learner-centered assessment approaches in educational systems that typically employ top-down, test-based methods (Pereira et al., 2015). Founded on a skills-based learning model, the European Higher Education Area (EHEA) currently guides the operations of European universities, prompting the adoption of new methodologies and assessment systems. Systematic reviews of formative assessment reveal that despite evidence that learner-centred formative assessment practices are effective, such practices have been researched predominantly in school-based contexts, unevenly implemented in European higher education, and dominated by studies from the United Kingdom (Pereira et al., 2015; Morris et al., 2021). Moreover, a recent study of Italian higher education assessment practices underscores a culture of assessment that places emphasis on the summative or concluding phase of teaching rather than developmental supports for ongoing learning through formative assessment (Doria et al., 2023). In this study, we answer calls for broader empirical work on formative assessment in higher education; specifically, we focus our attention on the implementation of embedded formative assessment within programs that prepare university students to work as pre-service teachers, social workers and heads of social services in Italy.

This study addresses a gap in the research on how formative assessment can be used to develop and evaluate students who are preparing for professional contexts where formative assessment knowledge is both a beneficial and expected skill. This goal is achieved through the following specific research questions: can formative assessment help students to achieve deep learning of several assessment methods and strategies, support students to understand the complexity of assessment procedures, promote students’ growth from both a personal and professional point of view, and motivate them to use multiple forms of assessment when they become professionals in their respective fields?

The next section provides an overview of how conceptual understandings from the literature on formative assessment provide a rationale for the research and inform the study design. Drawing on existing literature on formative assessment in higher education contexts, we explore how such concepts and practices might be used to foster the understanding of formative assessment among pre-service teachers, social workers, and heads of social services. Thereafter, the study aims are explored through qualitative and quantitative data that support and sustain reasonable and realistic ways to introduce formative assessment strategies and activities in higher education contexts (Crossouard and Pryor, 2012). Finally, the limitations and difficulties of combining and effectively balancing summative and formative assessment methods (Lui and Andrade, 2022) are discussed, and recommendations for future practice are made.

2 Theoretical framework and literature review

This research derives from a theoretical understanding of learning as an ongoing and developmental process that is effective when the learner is engaged in and metacognitive about the assessment process (Bruner, 1970). Like Crossouard and Pryor (2012), we resist the separation of theory from practice and consider them entangled. This framework underpins both the concepts explored in the next sections of this paper, and how these concepts have influenced the design, analysis and reporting of the study.

When exploring the potential of formative assessment in a higher education context that is largely summative, we considered three main areas of literature to support and situate the work: formative assessment as a developmental process in education, the potential of formative assessment within programs for professionals in education, and the principles of formative assessment that could inform the study design. Throughout this section we demonstrate how, in alignment with the learner-centred nature of formative assessment, this study aims to create and investigate the conditions for building higher education learning environments where students can experience several assessment types and can reflect on and improve their own learning processes and competence development (Dann, 2014; Ibarra-Sáiz et al., 2020). We also establish how the student profile that inspires us to conduct this study is represented by learners who are ultimately able to monitor, self-reflect, modify their learning strategies according to different situations, and seek multiple creative solutions suitable for their future professional tasks (Tillema, 2010; Evans et al., 2013; Ozan and Kıncal, 2018).

2.1 Formative assessment as a developmental process for higher education

Educational theorist Jerome Bruner (1970) stated that “learning depends on knowledge of results, at a time when, and at a place where, the knowledge can be used for correction” (p. 120), indicating that learning processes are not only aimed at remembering content and information but also involve modification and understanding the best ways to learn. Higgins et al. (2010) defined formative assessment as a task to be carried out while students are learning so that they can have several forms of feedback aimed at improving their own learning, whether marked or not. From this basis, and like Petty (2004), we assert that the main goal of formative assessment is developmental. Essentially, formative assessment is intended to assist students in diagnosing and monitoring their own progress by identifying their strengths and weaknesses and spending their efforts on trying to improve their learning processes (Petty, 2004, p. 463). To summarize, the key concept of formative assessment is represented by the fact that the reflexivity of students is not static but it can be enhanced or further developed at any time (Hadrill, 1995).

To be effective in higher education contexts, formative assessment requires certain characteristics. First of all, formative assessment should be continuous (Brown, 1999). An episodic and occasional activity with formative assessment cannot allow students a meaningful opportunity to self-reflect on their own learning. Instead, “regular formative assessment can be motivational, as continuous feedback is integral to the learning experience, stimulating and challenging students” (Leach et al., 1998, p. 204). The effectiveness of formative assessment in higher education contexts is well described by Yorke (2003) when the author specifies that, through formative assessment practices, students have the opportunity to understand the meaning of formative comments made either by the teachers or their peers. Additionally, students can further modify their learning approaches based on their own understandings.

2.2 Formative assessment within programs for professionals working in educational contexts

A key question regarding the use of formative assessment within programs for professionals in educational contexts relates to how understanding informs future practice. If students have the opportunity to experience formative assessment strategies while studying at university, will they be more likely to practice formative assessment when they are teachers or social workers? This inquiry was made by Hamodi et al. (2017), who identified a need for greater understanding in this under-researched area. They found that formative assessment within programs for professionals in educational contexts represents a three-fold opportunity. Firstly, formative assessment can be used as a strategy to improve all students’ learning processes during their university programs. Secondly, students in initial teacher education programs should learn and practice several formative assessment strategies because they will have to create many educational opportunities in their future practice. Lastly, students should be encouraged to use formative assessment when they become professionals in their respective working fields, inside and outside school. While the first opportunity is beneficial for all university students, the last two are particularly evident for the educational professionals who are the participants of our study: pre-service teachers, social workers, and heads of social services.

Formative assessment has cognitive and metacognitive benefits for all university students, and for prospective teachers, social workers and heads of social services, there are additional professional benefits, including the ability to implement formative assessment in educational contexts (Kealey, 2010; Montgomery et al., 2023). Specifically, formative assessment represents a vital strategy for professionals working in educational settings because it provides several feedback opportunities to develop self-regulated learning (Clark, 2012; Xiao and Yang, 2019).

Feedback and self-regulation are two important dimensions of formative assessment. Regarding the first dimension, feedback, Jensen et al. (2023) emphasized that feedback should be directed toward the development of instrumental and substantive learning goals. Instrumental feedback relates to whether the work has accomplished the task criteria, while substantive learning feedback, which is less common, directs students to “reflect critically on their own assumptions and leads to a new level of understanding or quality of performance” (p. 7). Furthermore, feedback should be based on comments that stimulate reflection (Dekker et al., 2013). According to Suhoyo et al. (2017), such comments should address: strengths (what students did well); weaknesses (aspects that need improvement); comparison to standard (similarities and differences with the requested task); correct performance (whether students’ performance approximates optimal performance); and an action plan (indicating future improvements). Ultimately, feedback should be timely, specific, actionable, respectful, and non-judgmental (Watson and Kenny, 2014).

Regarding self-regulation, formative assessment is aimed at setting up activities to allow students to react to feedback so as to enrich and increase the final outcomes (Ng, 2016). In other words, Pintrich and Zusho (2002) suggested that self-regulation is based on an active learning process in which learners are able to monitor and regulate their own cognition (p. 64). To do so, Nicol and Macfarlane-Dick (2006) indicated seven main principles for feedback that facilitates positive self-regulation: clarifying what good performance is (goals, criteria, expected standards); facilitating the development of self-assessment (reflection) in learning; delivering high quality information to students about their learning; encouraging teacher and peer dialogue around learning; encouraging positive motivational beliefs and self-esteem; providing opportunities to close the gap between current and desired performance; providing information to teachers that can be used to help shape teaching (p. 205).

While summative assessment is necessary to indicate the level of student performance, it remains an extrinsic assessment that does not foster students’ deep reflection on their own learning progressions (Ismail et al., 2022). Formative assessment represents an opportunity for prospective workers in education to deeply reflect on both their personal and professional development through cognitive and metacognitive benefits and feedback that facilitates positive self-regulation.

2.3 Formative assessment strategies: self-, peer-, and group-assessment

From the literature, we identified three main formative assessment strategies to be incorporated into our study: self-, peer-, and group-assessment. Panadero et al. (2015) defined self-assessment as an activity through which students can explain and underline the “qualities of their own learning processes and products” (p. 804). As specified by Andrade (2019), it is always important to clarify the purpose of formative assessment: in this study, self-assessment was not aimed at giving a grade. The activity was intended to support students’ reflection on their learning processes, to improve student learning and, in particular, to develop students’ capacities for giving feedback to themselves or others, as described by Wanner and Palmer (2018).

Regarding peer-assessment, Biesma et al. (2019) specified that peer activities can allow students to analyze the quality of a task completed by other students, highlighting the quality of their learning processes and their professional development. Similarly, van Gennip et al. (2010) defined peer-assessment as a learning intervention; consequently, peer-assessment can be considered a supplemental strategy in the development of assessment as learning. Moreover, Yin et al. (2022) specified that peer-assessment “is not merely about transferring information from a knowledgeable person to a rookie learner, but an active process where learners are engaged with continuous assessment of knowledge needs and learn to re-construct relevant cognitive understanding in context” (p. 2).

The third main formative assessment strategy is a particular form of peer-assessment carried out in groups. In this case, feedback is provided by a group of students for developmental purposes (Baker, 2007). This form of peer-assessment is particularly useful for professionals in education when they have to present, argue and discuss with peers the description and the design of educational activities (Homayouni, 2022). Essentially, group-assessment is a form of “co-operative or peer-assisted learning that encourages individual students in small groups to coach each other in turn so that the outcome of the process is a more rounded understanding and a more skillful execution of the task in hand than if the student was learning in isolation” (Asghar, 2010, p. 403). For all formative assessment strategies, a rubric designed by the teacher to lead the students’ reflection and assessment is fundamental (Andrade and Boulay, 2003).

Morris et al. (2021) highlighted the evidence on university students’ academic performance when instructors use formative assessment strategies on a regular basis. These authors identified the four main points of an effective formative assessment strategy: (a) content, detail and delivery, (b) timing and spacing, (c) peers, and (d) technology. They found that formative activities can support positive educational experiences and enhance student learning and development.

2.4 Critical views of formative assessment in higher education contexts

In addition to the above aspects of formative assessment, it is important to consider criticisms related to the use of formative assessment strategies in higher education and how they influence this work. For instance, Brown (2019) posed the question: is formative assessment really assessment? According to this author, formative assessment has some aspects of assessment but it cannot be considered assessment. A series of reasons included these points: feedback occurs in ephemeral contexts; it is not possible to recognize the interpretations of teachers and, additionally, it is not possible to understand if those interpretations are adequately accurate; ultimately, stakeholders cannot know if the conclusions are valid or not.

Hamodi et al. (2017) emphasized that formative assessment strategies risk conflicts between students when aimed at giving or affecting grades. However, students also “recognize that the formative assessment they experienced as university students has proved valuable in their professional practice in schools” (p. 186). The social difficulties that can arise from formative assessment are a main concern (Biesma et al., 2019). Additionally, both Koka et al. (2017) and Bond et al. (2020) stressed that formative assessment is not effective if not used regularly, and Crossouard and Pryor (2012) emphasized that unexamined theories related to formative assessment can be implicit in practice and potentially narrow the educational possibilities of an intervention. Similarly, Morris et al. (2021), following Yorke (2003), question which assessment approaches are most effective for higher education students, because there is a lack of clarity across higher education.

Ultimately, the critical views of formative assessment are focused on the following questions: is it necessary to link formative assessment with a grading scale? In what ways should the informal interactions raised during the formative assessment activities be connected with instructors’ formal grading? Is there evidence regarding the effectiveness of formative assessment strategies? How might university students be motivated to use formative assessment in their future workplace environments? Lastly, how can summative and formative strategies be effectively integrated throughout the learning activities? Situated within these theories, concepts, critiques, and questions, this study aims to provide views and clarity from the Italian context.

3 Context of the study and research questions

3.1 Context

Before presenting the aims and research questions, it is useful to describe Italian university programs for pre-service teachers, social workers, and heads of social services. Pre-service teachers must have a 5-year degree to teach either kindergarten (pupils aged 3–5) or the first five grades of primary school (pupils aged 6–10). Social workers must have a 3-year bachelor’s degree in educational sciences with a focus in one of two main programs. The first program trains professionals to work in educational contexts outside schools: educational services for minors with family difficulties; educational services for migrants; counselling centers; educational centers for unaccompanied foreign minors; anti-violence centers; centers for minors who committed crimes; etc. The second program is dedicated to social workers who will be employed as early childhood educators for pupils aged 0 to 3 in kindergarten. It is important to note that these professionals are called educators for early childhood services, but do not hold teacher status. Lastly, heads of social services must have a 2-year master’s degree in educational sciences. These students go on to work in two main fields: as designers of local/national/international educational projects carried out by private and public bodies/centers; or as coordinators of private and public social and educational services focused on different sectors such as early childhood, minors, migrants, etc. As indicated earlier, the Italian context is particularly suitable for this study since it has a higher education system based mainly on summative assessment. Further, given the learner-centred nature of formative assessment, it is also conceptually and methodologically fitting to solicit the voices of and feedback comments made by the student participants regarding the effectiveness of formative strategies in this predominantly summative assessment culture.

3.2 Aims and research questions

This exploratory study aimed to investigate the role of formative assessment strategies carried out in university programs for pre-service teachers, social workers, and heads of social services. The overall aim of this study was to explore the benefits and the limitations related to the use of formative assessment methods in higher education contexts. Specifically, the study aimed to examine the characteristics and practices of self-, peer- and group-assessment. Consequently, the overall research question can be expressed as follows: to what extent can formative assessment methods help higher education students reflect on their assessment competences as future professionals in educational and social fields? Further, we identified specific research questions: did the use of formative assessment:

(RQ #1) help students to achieve deep learning of several assessment methods and strategies?

(RQ #2) support students to understand the complexity of assessment procedures?

(RQ #3) promote the students’ growth from both a personal and professional point of view?

(RQ #4) motivate the students to use multiple forms of assessment when they become professionals in their respective fields?

In addition, we specified two supplementary research questions: (RQ #5) were there differences and/or specificities in the use of formative assessment in the programs for pre-service teachers, social workers, and heads of social services? (RQ #6) were there differences, in terms of effectiveness, between the formative assessment methods: self-, peer- and group-assessment?

4 Research design

To answer the research questions, a mixed-method research design was chosen. Specifically, we followed the indications by Tashakkori and Teddlie (2009), adopting a mixed-method multistrand design since the exploratory study comprised several stages. Additionally, as stated by Creswell and Clark (2011), the timing of data collection was concurrent since we implemented both the quantitative and qualitative strands during each phase of the study. The interpretation of the results was based on a triangulation of quantitative and qualitative data (Creswell et al., 2003) since there were no specific priorities between the kinds of data. Both data typologies were utilized to gain a deep understanding of the phenomenon. We chose a sequential design because it allowed us to implement the different formative assessment strategies with all students at the same time. In this way, the students could express their ideas on the effectiveness of each strategy. Conceptually, this methodological approach serves to amplify the points from the theoretical framework: we sought student-centred data that was sequential, collected over time, and offered multiple access points for gaining a deeper understanding of this student-centred assessment approach for higher education.

4.1 Participants, procedure, and instruments

4.1.1 Participants

As explained previously, we involved four main groups of students in the study: pre-service teachers, social workers, early childhood educators, and heads of social services. Each of these groups had specific courses included in their programs during the academic year 2022/23. Pre-service teachers (including kindergarten teachers 3-6/Primary teachers) were divided into two sub-groups. The first had a course named “Curriculum development” scheduled in the second year of the five-year teacher education program. The second had a course called “Play as educational strategy” scheduled in the third year of the same program. The social workers also took the “Curriculum development” course, but in the first year of their program. The early childhood educators had the course “Play as educational strategy” scheduled in the second year of their program. Lastly, the heads of social services had a course called “Designing and assessing learning environments” scheduled in the first year of the master’s degree. All the courses contained common content and topics (learning environments, educational and assessment strategies, etc.) in addition to themes and issues relevant to the specific professional development of the students’ programs.

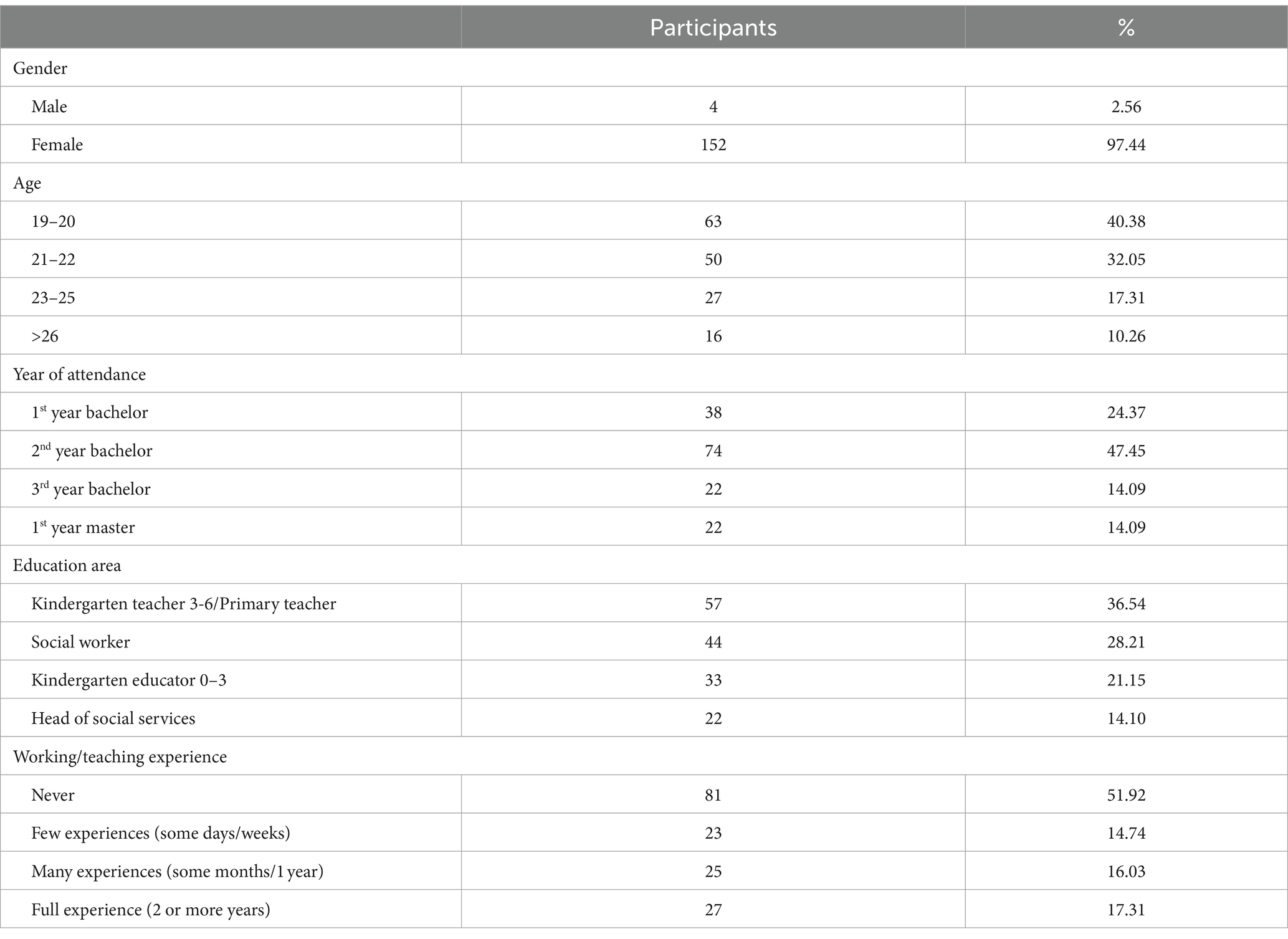

Table 1 indicates the demographic and educational characteristics of participants. Almost all participants were female and between 19 and 22 years of age. In fact, 70% of participants were in the first two years of the bachelor’s degree. The groups of students, divided according to prospective job, were mainly pre-service teachers (36.54%), followed by social workers (28.21%) and early childhood educators (21.15%). More than half of the participants had no prior teaching or work experience before the study. Around 30% of participants already had some teaching or work experience, and 17.31% of participants were experienced teachers or social workers. All students were invited to take part in the study, and 57 of the 106 pre-service teachers in the courses (53.77%) agreed to be involved. Similarly, 44 out of 105 social workers (41.90%), 33 out of 81 early childhood educators (40.74%), and 22 out of 35 heads of social services (62.86%) participated in the study.
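The participation figures in this paragraph can be recomputed from the reported counts. The following Python sketch is only an illustration of that arithmetic: the dictionary layout and variable names are assumptions introduced here and are not part of the study's materials.

```python
# Enrolled and participating students per group, as reported in the text.
# The dictionary layout and the names used here are illustrative assumptions.
groups = {
    "pre-service teachers": (106, 57),
    "social workers": (105, 44),
    "early childhood educators": (81, 33),
    "heads of social services": (35, 22),
}

# Size of the final sample across all four groups.
total_participants = sum(joined for _, joined in groups.values())

for name, (enrolled, joined) in groups.items():
    # Share of the enrolled students in this group who agreed to take part.
    response_rate = 100 * joined / enrolled
    # Share of the final sample that this group represents.
    sample_share = 100 * joined / total_participants
    print(f"{name}: response rate {response_rate:.2f}%, "
          f"sample share {sample_share:.2f}%")

print("total participants:", total_participants)
```

The response rate uses the number of enrolled students in each course group as the denominator, whereas the sample share uses the total number of participants.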

The principles of research ethics were strictly followed. All students enrolled in the different programs were informed about the aims, activities and procedures for the study. Participation was optional, and those who agreed to participate gave written informed consent.

4.1.2 Procedure

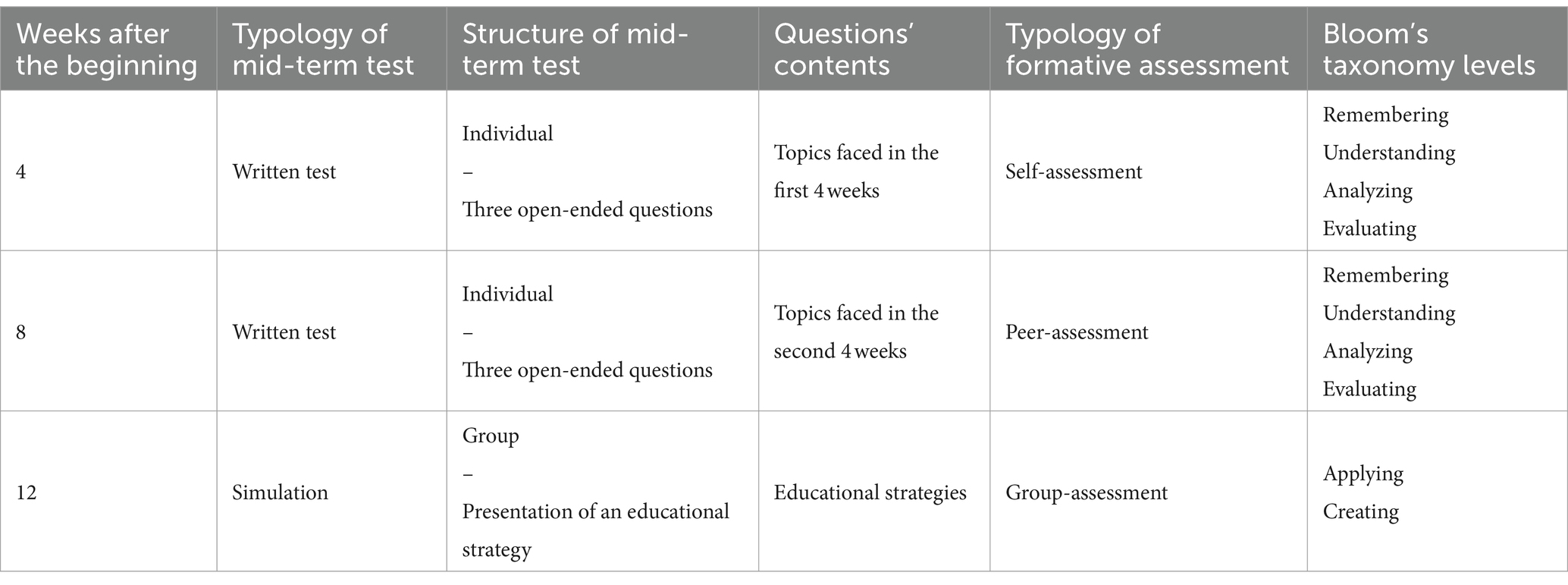

The procedure was split into three main phases carried out throughout the academic year 2022/23. The first phase consisted of a self-assessment activity. After a mid-term written test, composed of open-ended questions and assigned four weeks from the beginning of the course, the students had to self-assess their own exam performance following a rubric designed by the instructor. The second phase consisted of a peer-assessment activity. After another mid-term written test, similar to the first, assigned after eight weeks from the beginning of the course, the students had to assess the answers written by another student following a rubric designed by the instructor.

Table 2 summarizes the procedure, specifying that the first and the second mid-term written tests were taken by each student individually and composed of three open-ended questions focused on the topics covered by the instructor in the respective period. For instance, the first test included the concepts of learning environment and educational space. The second test contained the idea of competence, and strategies to design and assess an educational path. Each question required the students to develop two main aspects: content and argumentation. Students had to write responses that both presented topic-related content and argued ideas and connections with a high level of coherence. The first two phases involved the following levels of Bloom’s taxonomy: remembering, understanding, analyzing, and evaluating.

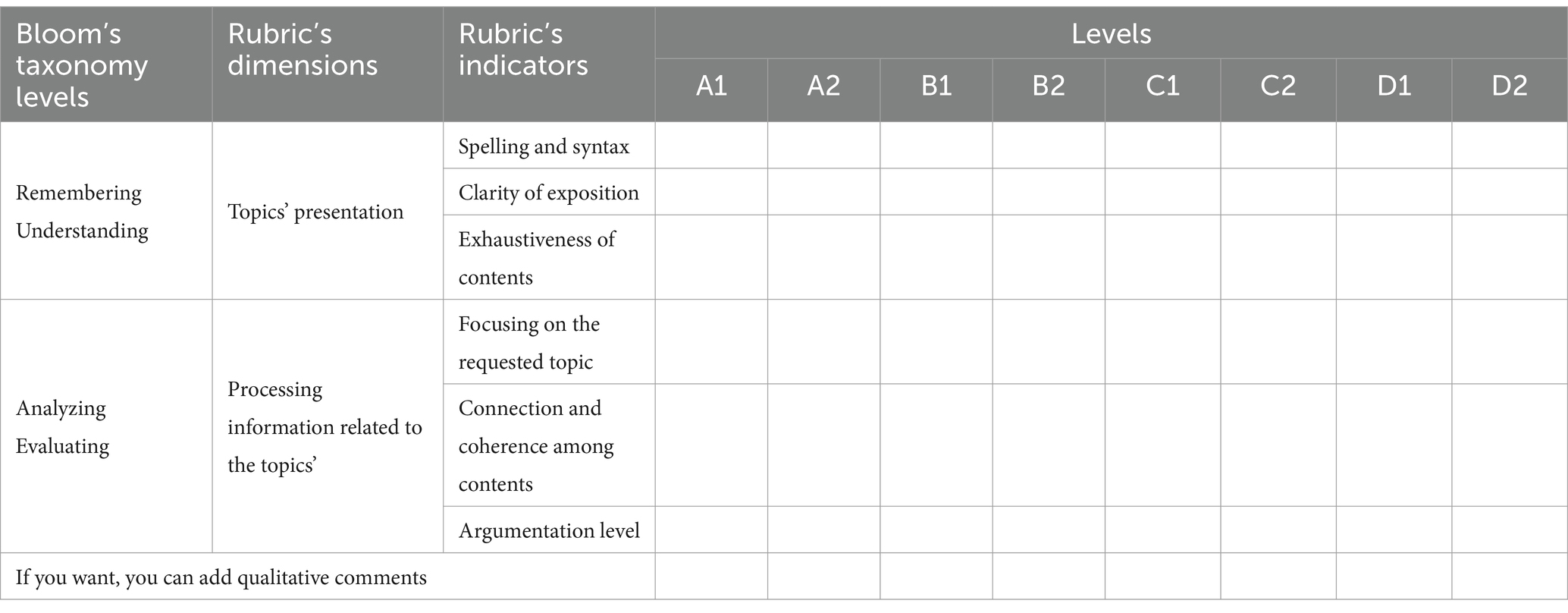

After the first mid-term test, the students had to reflect on their own answers, following the rubric in Table 3. They had to self-assess their three answers, so they completed the rubric three times. The levels followed the levels used in the Italian educational system: from A (advanced level) to D (beginning level). The sub-division into two sub-levels was necessary to give more opportunities to assess the nuances of their own learning. In addition, the students could add qualitative comments. Similarly, after the second mid-term test, the students had to peer-assess the answers of a classmate, using the same rubric. The peer-assessment was random and blinded, so students could not identify their peer-reviewer.

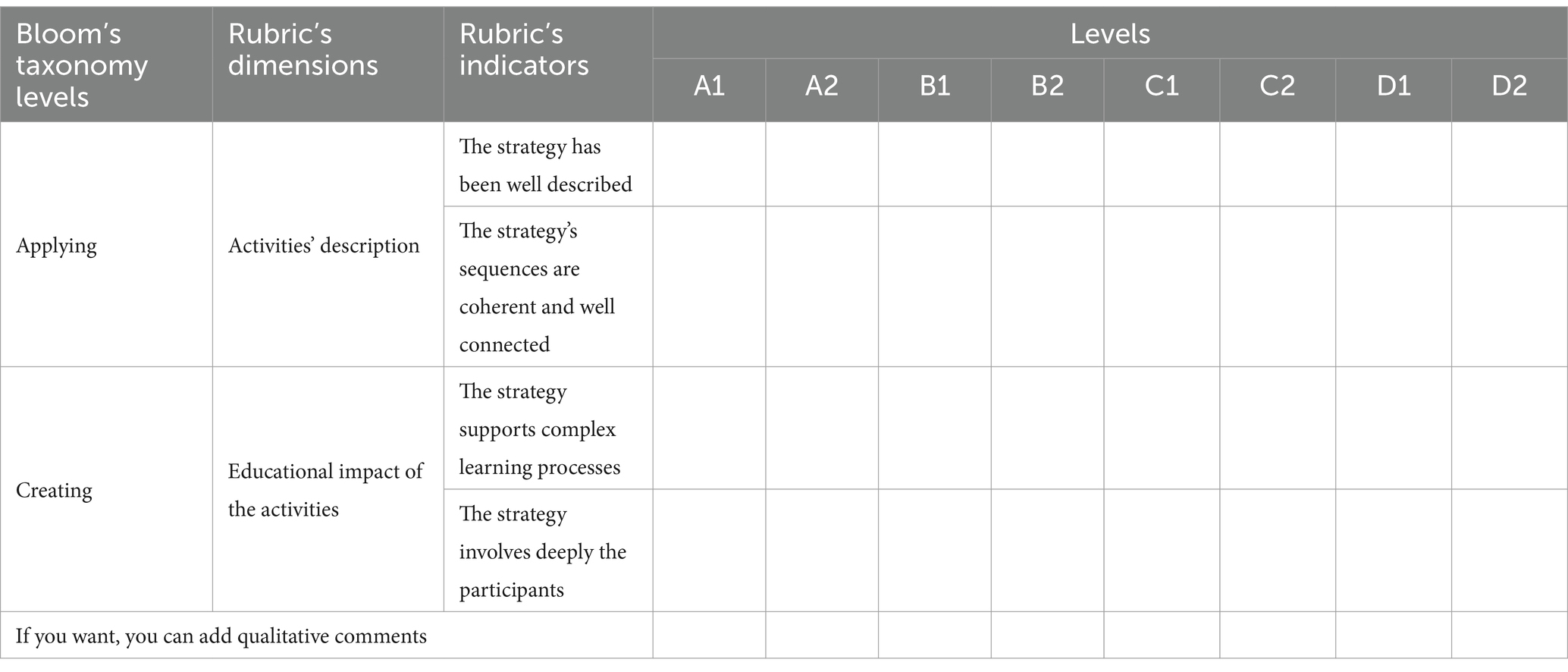

The third phase consisted of a group-assessment activity. In the last mid-term test, the students had to design an educational action plan based on a strategy such as cooperative learning, problem-based learning, case study, etc. The strategy was randomly assigned to the students by the instructor the day before the test. During the test, the students had to present their action plan to a group of peers (each group was composed of 4–5 students). After the presentation, the group members had to assess the presentation following the rubric shown in Table 4.

4.1.3 Instrument

The research procedure involved three observational moments. After each mid-term test, the students were asked to fill in an online questionnaire focused on the research questions. The questionnaire was composed of three sections. The first section collected the demographic and educational characteristics of participants (see Table 1). The second included three scales, one for each type of formative assessment (self-, peer-, and group-). Each scale was composed of five items intended to capture the participants’ views on the different formative assessment techniques. Each item asked whether the formative assessment activity (self, peer, or group) supported students’ development in one of the following factors: (a) learning assessment strategies (Learning); (b) understanding complexity of assessment procedures (Complexity); (c) growing from a personal point of view since I’m more aware of my capacities and limitations (Personal); (d) growing from a professional point of view since I’m more aware of my competences as teacher/social worker (Professional); (e) increasing my motivation to use formative assessment strategies in the future (Motivation).

These items were rated by the students with a four-point Likert scale, from 4 (Yes, this formative assessment activity has been very useful/effective) to 1 (No, this formative assessment activity has not been useful/effective at all). In addition, in the third section, they were able to add free qualitative comments and, ultimately, they were asked to suggest whether the instructor should use this strategy again the following year with new students.

4.2 The qualitative–quantitative data analysis procedure

The data analyses were performed both from a qualitative and quantitative point of view. The qualitative data were coded with NVivo 14 following the three steps suggested by grounded theory: open coding, axial coding and selective coding (Charmaz, 2014; Corbin and Strauss, 2015; De Smet et al., 2019). The quantitative data were analyzed with SPSS 29 and focused on reliability analyses, ANOVA for repeated measures, the Friedman test, Exploratory Factor Analysis (EFA), and non-parametric tests to highlight potential statistically significant differences between the demographic and educational characteristics of the participants, considering gender, age, year of attendance, education area, working/teaching experience as variables.

5 Data analysis and findings

The data analysis is structured as follows. On the basis of the study aims indicated in the introduction, both quantitative and qualitative findings will show, firstly, which formative assessment activities are more suitable in higher education contexts; that is, which techniques the students preferred: self-, peer-, and/or group-assessment. Secondly, the findings will show which factors linked with formative assessment (Learning, Complexity, Personal, Professional, Motivation) were developed most, and therefore in which way formative assessment can become a basic and crucial concept for the students. Finally, the findings will indicate whether experiencing formative assessment supports the students’ motivation to use it in their own future professional fields.

5.1 Quantitative findings

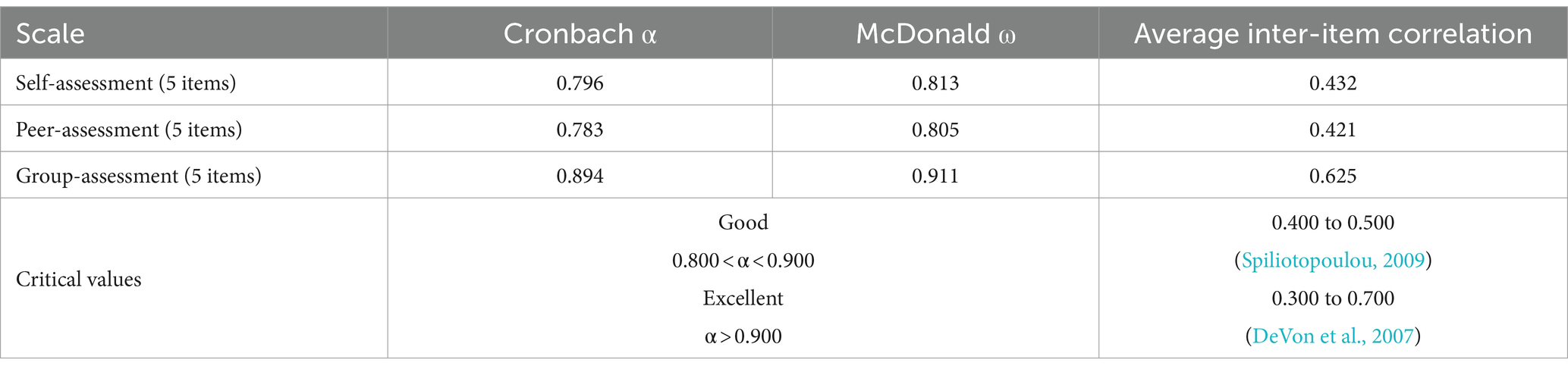

The first quantitative data analysis was focused on the instrument’s reliability, so we used the following coefficients: Cronbach’s Alpha (α); McDonald’s Omega (ω); and average inter-item correlation. Table 5 summarizes the results.
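As an illustration of two of the coefficients named above, the following minimal sketch computes Cronbach’s alpha and the average inter-item correlation for one five-item scale. It is not the SPSS procedure used in the study: the function names and the `responses` data are hypothetical, and McDonald’s omega is left out because it requires a fitted factor model.

```python
from itertools import combinations
from statistics import mean, variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(rows[0])                      # number of items in the scale
    items = list(zip(*rows))              # one tuple of scores per item
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(r) for r in rows])
    return k / (k - 1) * (1 - item_var_sum / total_var)


def pearson_r(x, y):
    """Pearson correlation; assumes both items have non-zero variance."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def mean_inter_item_correlation(rows):
    """Average of the Pearson correlations over all pairs of items."""
    items = list(zip(*rows))
    return mean(pearson_r(a, b) for a, b in combinations(items, 2))


# Hypothetical answers of six students to five 4-point Likert items
# (Learning, Complexity, Personal, Professional, Motivation).
responses = [
    [4, 4, 3, 3, 4],
    [3, 4, 3, 2, 3],
    [2, 3, 2, 2, 3],
    [4, 4, 4, 3, 4],
    [3, 3, 2, 2, 2],
    [1, 2, 1, 1, 2],
]
print(round(cronbach_alpha(responses), 3))
print(round(mean_inter_item_correlation(responses), 3))
```

Both functions use sample variances (n − 1 in the denominator), which is the usual convention for alpha; a scale with zero total variance would need separate handling.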

Then, the quantitative analysis concentrated on the potential differences, in terms of effectiveness, between the formative assessment methods: self-, peer- and group-assessment. The Friedman test revealed a statistically significant difference among these three methods (χ2 = 9.726 df = 2 p < 0.008). Specifically, Conover’s post hoc comparisons showed no difference between peer- and group-assessment (t = 0.174 p = 0.862) but significant differences between self- and peer-assessment (t = 2.786 p < 0.005) and between self- and group-assessment (t = 2.612 p < 0.009). The scores were higher for peer- and group-assessment.
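For readers who want to see how a Friedman comparison of three related measures is computed, a minimal sketch follows. It is an assumption-laden illustration rather than the SPSS analysis: the function names and the `scores` data are hypothetical, and the code is restricted to exactly three conditions because the chi-square survival function with 2 degrees of freedom has the closed form exp(−χ²/2).

```python
import math


def average_ranks(values):
    """Rank values from 1..k; tied values receive the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1       # positions are 0-based, ranks 1-based
        for pos in range(i, j + 1):
            ranks[order[pos]] = mean_rank
        i = j + 1
    return ranks


def chi2_sf_df2(x):
    """Upper-tail probability of a chi-square variable with 2 degrees of freedom."""
    return math.exp(-x / 2)


def friedman_three_conditions(rows):
    """Tie-corrected Friedman chi-square and p-value for three related conditions."""
    n, k = len(rows), 3
    rank_sums = [0.0] * k
    tie_term = 0.0
    for row in rows:
        if len(row) != k:
            raise ValueError("each row needs exactly three scores")
        for j, r in enumerate(average_ranks(list(row))):
            rank_sums[j] += r
        for v in set(row):                # sum of (t^3 - t) over tie groups
            t = list(row).count(v)
            tie_term += t ** 3 - t
    correction = 1 - tie_term / (n * (k ** 3 - k))
    if correction == 0:                   # every row fully tied: no evidence of difference
        return 0.0, 1.0
    chi2 = 12 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3 * n * (k + 1)
    chi2 /= correction
    return chi2, chi2_sf_df2(chi2)


# Hypothetical mean scale scores per student: (self, peer, group).
scores = [(3.0, 3.4, 3.6), (2.8, 3.2, 3.2), (3.2, 3.0, 3.4), (2.6, 3.4, 3.0), (3.0, 3.6, 3.8)]
chi2, p = friedman_three_conditions(scores)
print(round(chi2, 3), round(p, 3))
```

Under this closed form, the reported statistic χ2 = 9.726 with df = 2 corresponds to a p-value of about 0.008, which is consistent with the value given in the text.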

Analyzing each scale, we found significant differences between the items related to the research questions. Particularly, within the scales regarding the self- and the peer-assessment methods, we found that the scores for the factors named “Complexity” and “Motivation” were higher than the others.

Similarly, analyzing the potential differences related to each factor between the scales, we found that the scores for the factor “Complexity” were higher (χ2 = 11.647 df = 2 p < 0.003) in the self- (t = 2.054 p < 0.041) and peer-assessment (t = 3.407 p < 0.001) compared to those in the group-assessment. In addition, the scores for the factor “Professional” were higher (χ2 = 14.630 df = 2 p < 0.001) in the peer- (t = 2.692 p < 0.007) and group-assessment (t = 3.718 p < 0.001) compared to those in the self-assessment.

Ultimately, analyzing which are the factors with the highest scores across the scales, we found that “Complexity” and “Motivation” were more appreciated by the participants compared to other factors (χ2 = 73.945 df = 4 p < 0.001).

5.1.1 Differences among participants

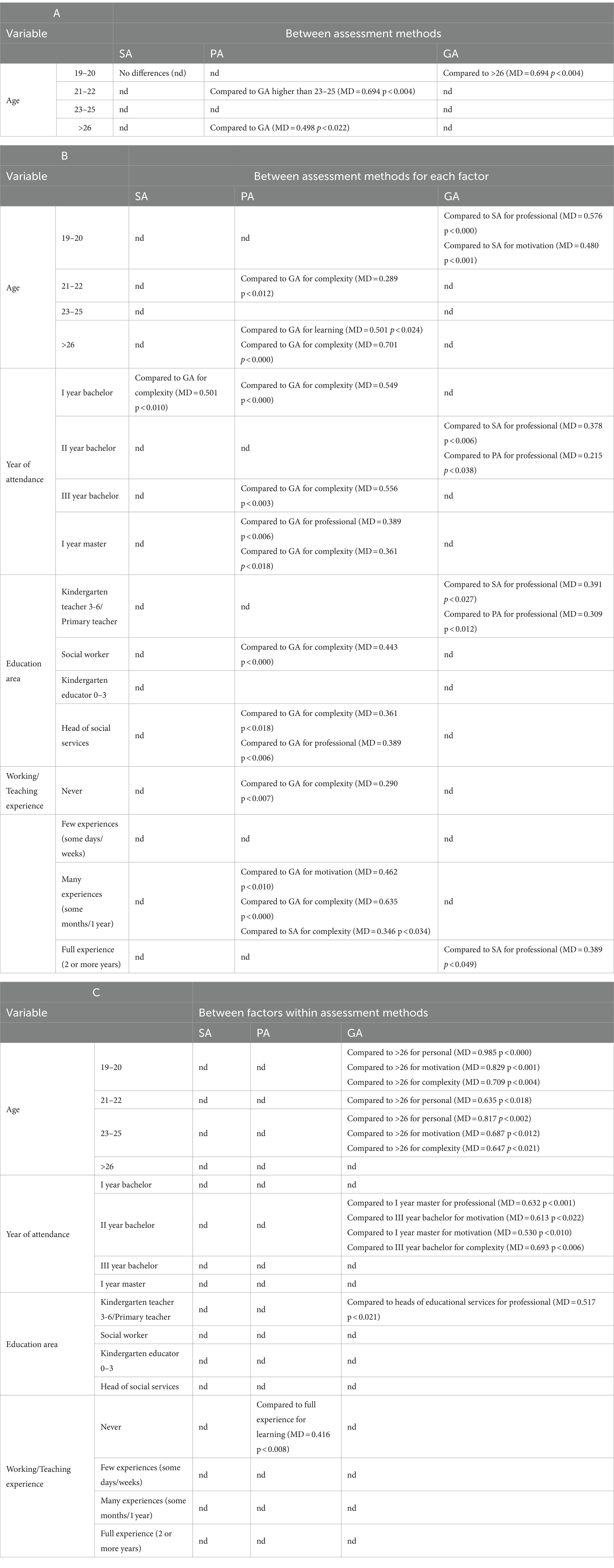

The differences related to the demographic and educational characteristics of the participants were analyzed with ANOVA for repeated measures. Tables 6A–C summarize the results. The assessment methods are indicated with SA (self-assessment), PA (peer-assessment) and GA (group-assessment).

Table 6A presents the statistically significant differences between the variable ‘Age’ with its dimensions and each assessment method. Table 6B displays the differences between all variables with related dimensions and each factor comparing the assessment methods. Lastly, Table 6C reveals the differences between all variables with related dimensions and each factor within each assessment method.

Additionally, we found interesting differences regarding the importance of factors for some variables in general. Regarding the variable ‘Age’, the students aged 19–20 appreciated the factor ‘Complexity’ more than the factors ‘Personal’ and ‘Professional’ (respectively, MD = 0.501 p < 0.006 and MD = 0.621 p < 0.001). The same students also valued the factor ‘Motivation’ more highly than the factor ‘Professional’ (MD = 0.436 p < 0.001). Lastly, the students older than 26 appreciated the factor ‘Complexity’ more than ‘Professional’ (MD = 0.685 p < 0.030).

Considering the variable ‘Year of attendance’, the students in the second year of the bachelor’s degree gave higher scores to the factors ‘Complexity’ and ‘Motivation’ compared to ‘Professional’ (respectively, MD = 0.360 p < 0.036 and MD = 0.319 p < 0.014).

Within the variable ‘Education area’, pre-service teachers appreciated the factor ‘Complexity’ more than ‘Personal’ and ‘Professional’ (respectively, MD = 0.454 p < 0.037 and MD = 0.444 p < 0.034). Similarly, social workers appreciated ‘Complexity’ more than ‘Professional’ (MD = 0.501 p < 0.019).

Regarding the variable ‘Working/teaching experience’, students with no work experience (‘never’) appreciated ‘Complexity’ more than ‘Professional’ (MD = 0.449 p < 0.005). Finally, students with ‘full experience’ appreciated ‘Complexity’ and ‘Motivation’ more than ‘Professional’ (respectively, MD = 0.542 p < 0.012 and MD = 0.444 p < 0.010).

5.1.2 Exploratory factor analysis (EFA)

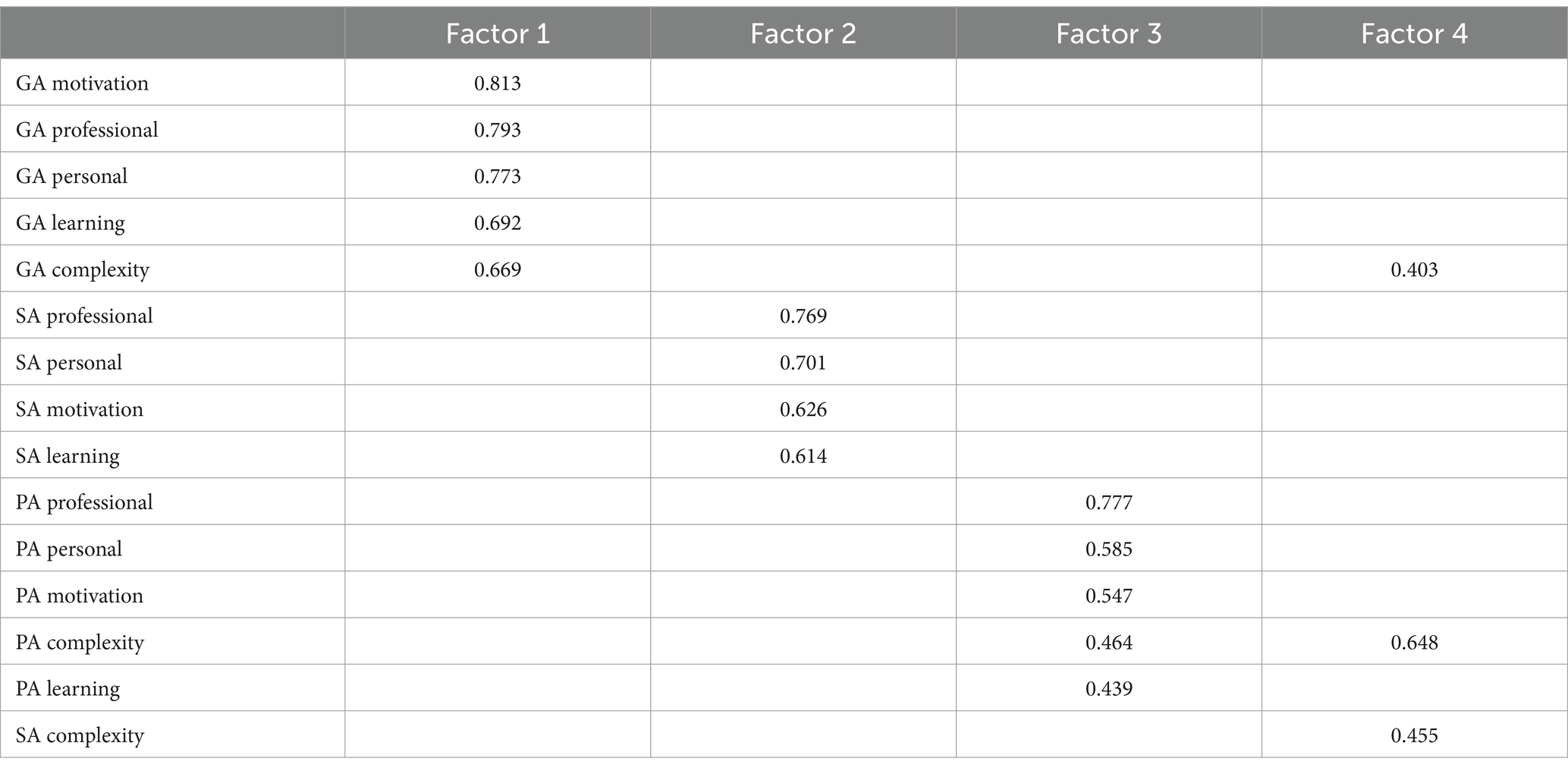

We performed an exploratory factor analysis (EFA) to identify potential common factors that might explain the structure of the instrument and the validity of the measured variables (Watkins, 2018). The EFA was completed with varimax rotation and Kaiser normalization, using principal components extraction, because we were interested in retaining components with eigenvalues > 1 to emphasize the presence of latent factors.

The results indicate that the sample was adequate since the Kaiser-Meyer-Olkin test was 0.844; additionally, Bartlett’s Test of Sphericity revealed a p-value of < 0.001 (χ2 = 1041.176; df = 105). Lastly, the goodness of fit test was 81.104 (df = 51; p < 0.005).

The EFA underscored four factors (see Table 7). Factors 1, 2 and 3 follow the structure of the instrument indicating, respectively, the group-assessment (38.86% of explained variance), the self-assessment (13.92%) and the peer-assessment (8.89%). The fourth factor indicated the importance of understanding the complexity of assessment procedures (7.22% of explained variance).
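The eigenvalue > 1 rule and the percentages of explained variance can be illustrated with a short sketch. It assumes that the eigenvalues come from a correlation matrix, so that they sum to the number of items (15 here, that is, three scales of five items, which agrees with the reported df = 105 = 15 × 14 / 2). The function name and the eigenvalues below are hypothetical, and percentages reported after varimax rotation may differ from those based on the initial eigenvalues.

```python
def kaiser_summary(eigenvalues):
    """Apply the eigenvalue > 1 rule and convert retained eigenvalues to % of variance."""
    total = sum(eigenvalues)              # equals the number of items for a correlation matrix
    retained = [ev for ev in eigenvalues if ev > 1.0]
    percents = [100 * ev / total for ev in retained]
    return retained, percents


# Hypothetical eigenvalues for a 15-item instrument (they sum to 15).
eigenvalues = [5.4, 2.2, 1.4, 1.1, 0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.3, 0.2, 0.1, 0.1]
retained, percents = kaiser_summary(eigenvalues)
print(len(retained), [round(p, 2) for p in percents])
```

With these illustrative values, four components pass the threshold, mirroring the four-factor structure described above without reproducing the study’s actual eigenvalues.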

5.1.3 Next year

A specific questionnaire item asked students to indicate whether, in their opinion, the formative activities should be repeated for students in the following year. This item was rated by the students on a four-point Likert scale, from 4 (Yes, absolutely) to 1 (Not at all). On this item, 97.51% of students chose options 4 and 3 for self-assessment, 94.12% for peer-assessment and 88.19% for group-assessment. The Friedman test did not show any statistically significant differences between the assessment methods (χ2 = 0.036 df = 2 p = 0.982).

5.2 Qualitative findings

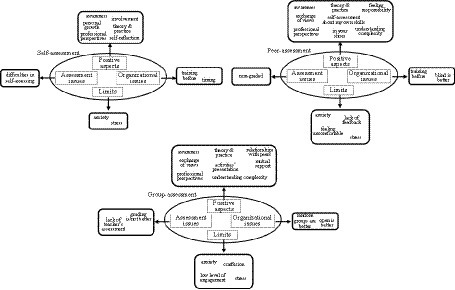

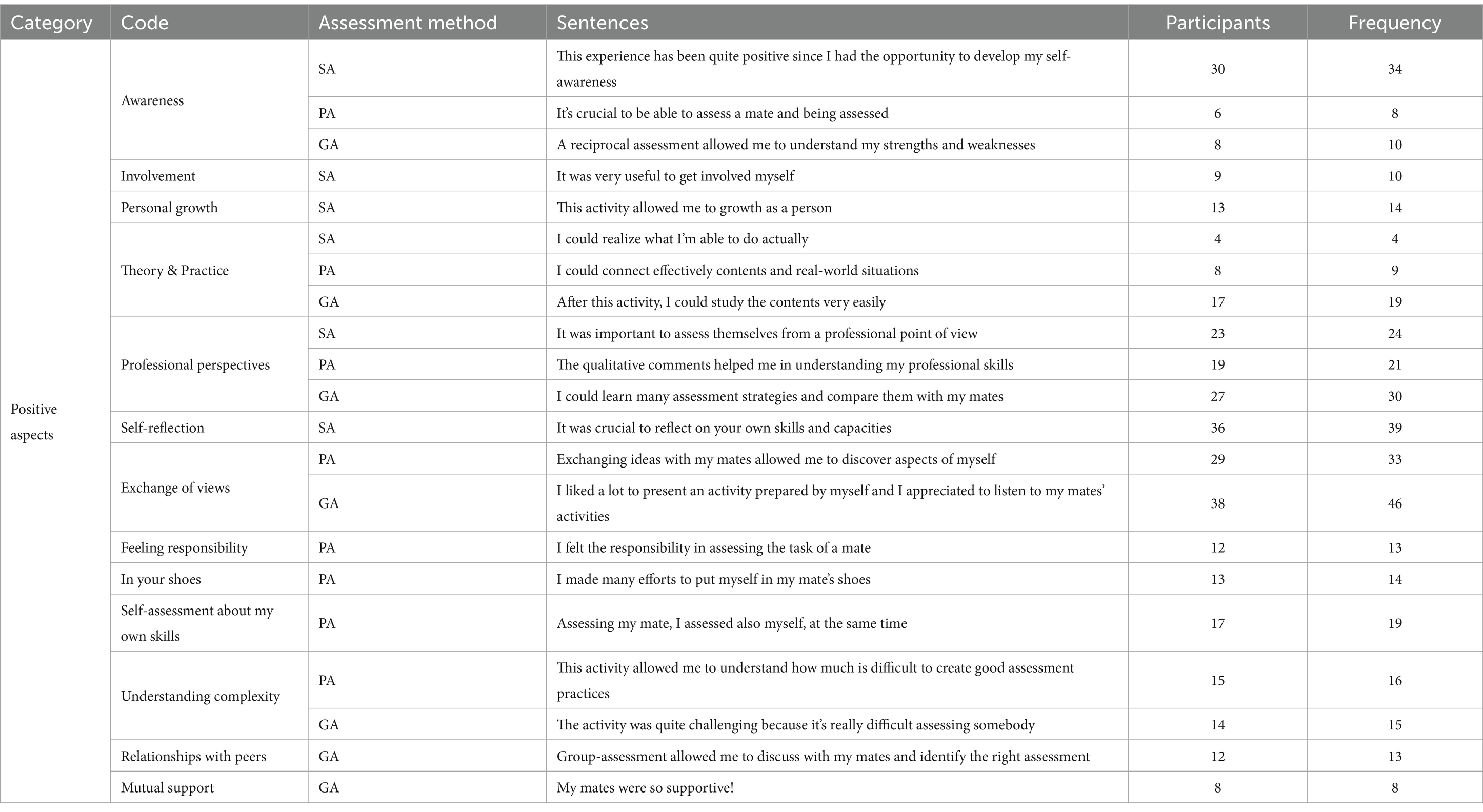

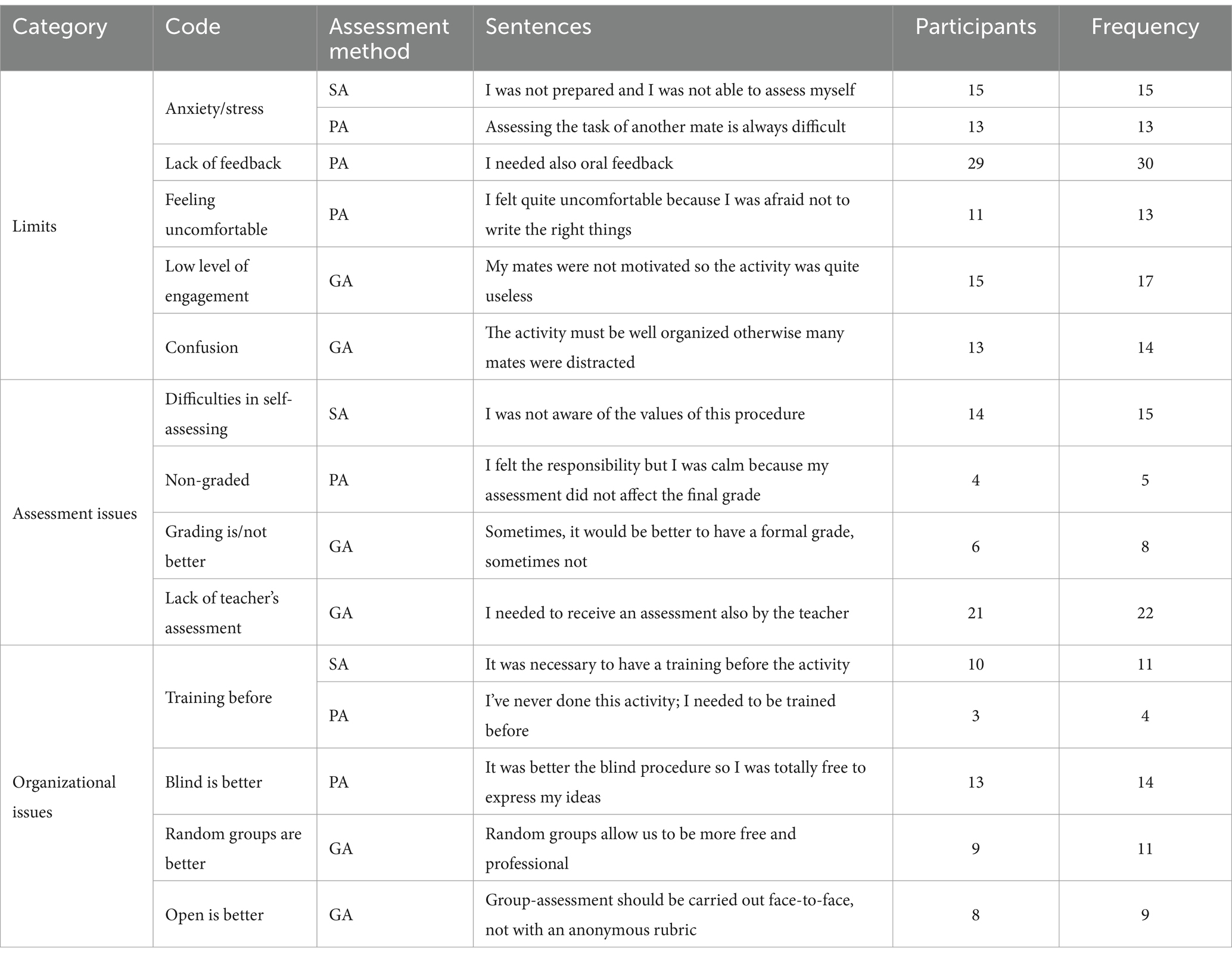

The qualitative data analysis highlighted four common categories for each assessment method: ‘Positive aspects’; ‘Limits’; ‘Assessment issues’ and ‘Organizational issues’. The first category includes codes related to positive characteristics of the assessment method, and the second category (Limits) contains codes that indicate the main weaknesses of the assessment method. The ‘Assessment issues’ category comprises codes focused on the forms/modalities of assessment. Lastly, the ‘Organizational issues’ category focuses on the technical questions related to the assessment method. As shown in Figure 1, categories with the same name can include codes shared between two or three assessment methods or specific codes that emphasize exclusive features of one assessment method.

Tables 8, 9 show in detail the categories and the codes split into the assessment methods. Additionally, these tables display examples of sentences which illustrate the codes, the number of participants who wrote sentences related to the code, and the frequency of the codes.

In addition to the information enclosed in Tables 8, 9, it is interesting to underline that 59 students considered the self-assessment activity ‘useful,’ whereas 51 students identified the peer-assessment activity and 44 students considered the group-assessment activity ‘very useful.’

6 Discussion

Both the quantitative and qualitative findings of this study provide important insights about the use of formative assessment in the higher education contexts and indicate significant implications from a practical point of view.

First of all, comparing the three formative assessment strategies, peer- and group-assessment proved to be more appreciated among the students involved in the study. Self-assessment was also evaluated as a positive activity, but peer- and group-assessment allowed students to reflect deeply on their own learning processes, create feedback, and improve and modify their learning strategies.

Among the five factors that characterized this study (Learning; Complexity; Personal; Professional; Motivation), two of them revealed their importance. The scores for “Complexity” (understanding complexity of assessment procedures) and “Motivation” (increasing my motivation to use formative assessment strategies in the future) were significantly higher than the other factors mainly for peer- and group-assessment activities. Opportunities to understand the complexity of assessment procedures and to increase motivation for future use of formative assessment strategies represented crucial elements of the formative assessment activities. These reasons are explained in the qualitative comments. Firstly, formative assessment methods allowed students to recognize that an assessment procedure is not simple and linear but it is composed of many connected components, both summative and formative. Consequently, students must be able to learn and design several assessment methods linked in a coherent way, as indicated by Ismail et al. (2022). Secondly, the use of formative assessment strategies in higher education increased the probability of using formative assessment techniques in the future, confirming the study carried out by Hamodi et al. (2017).

Regarding differences among participants, peer- and group-assessment were more appreciated by older students, indicating that these students rely on forms of professional relationships to develop their own professional perspectives, as denoted by Biesma et al. (2019) and Montgomery et al. (2023). Peer-assessment was, in general, more appreciated than group-assessment for developing “Complexity,” “Motivation” and also “Learning,” but group-assessment was more valued than peer- and self-assessment for increasing the “Professional” factor. This observation suggests that group-assessment represents an effective simulation of a work experience (Homayouni, 2022). The younger students appreciated group-assessment more than peer-assessment for the “Personal,” “Complexity” and “Motivation” factors, showing that younger students needed formative assessment activities for developing awareness of their own skills and capacities. Finally, students with no work experience appreciated the self-assessment activities for the factor “Learning,” revealing that they valued moments for reflecting on themselves.

The last quantitative finding arises from the exploratory factor analysis which confirmed that the factor related to understanding the complexity of assessment procedures is particularly significant for the participants.

The findings highlighted by the qualitative analysis reveal essential results of the study. Regarding the positive aspects, all three strategies helped students in growing their awareness in recognizing their learning processes, effectively connecting theory to practice, and consciously fostering their professional perspectives, as indicated by Tillema (2010). In particular, peer- and group-assessment boosted students’ capacity to exchange ideas and views with others; consequently, these strategies amplified peer relationships through responsibility to each other and deepened student understanding of the complexity of assessment procedures.

Additionally, the qualitative analysis identified three main limits across two of the formative assessment methods: a lack of feedback in peer-assessment, and a low level of engagement and a high level of confusion in group-assessment. Consequently, these two strategies must be carefully implemented. Even though they were highly appreciated by the students in general, the qualitative sections of the questionnaire indicate that peer- and group-assessment must be carried out in an adequate setting that allows students to express their ideas and motivations without pressure and confusion.

Lack of feedback, low level of engagement and high level of confusion represent three risks of formative assessment previously identified in the literature. The lack of feedback was already mentioned by Brown (2019) and the other two points were indicated by Crossouard and Pryor (2012).

The qualitative analysis also raised issues about the assessment quality and pinpointed crucial features related to the relationship between summative and formative assessment. Some students felt that group-assessment should be followed by a formal grade, while others indicated that the same activity should be carried out more informally. Regardless, all students felt the lack of an instructor-designed assessment to ensure that activities were well designed (Homayouni, 2022). These are fundamental points because the university teachers must decide if the formative assessment should affect and/or modify the final grade or not. This matter represents an open question that may also be influenced by the larger assessment culture (Doria et al., 2023). In this study, we decided that the formative assessment should not change the final grade because the formative strategies were focused on the metacognitive aspects.

From an organizational point of view, the students needed forms of training to effectively carry out both self- and peer-assessment. The organization of group-assessment was a particularly debated aspect because some students desired an open assessment discussion whilst other students wanted a completely blind assessment to avoid any conflict with their peers, as indicated by Hamodi et al. (2017).

7 Limitations of the study

This study presents some limitations. First, the participants are quite heterogeneous. They are all students enrolled in courses focused on educational contexts but the structure of the courses is different and this situation can affect the application of the assessment strategies. The second limitation is represented by the different topics faced in the different subjects. Even if the formative strategies can be considered valid for all subjects, it is necessary to reflect on the specificities of each subject to verify potential differences in managing and arranging the formative techniques. Finally, the study procedure lasted the whole academic year. It is likely that the elapsed time can represent a bias for students who faced three formative strategies throughout the course.

8 Conclusions and implications for policy and practice

Instead of assuming that ‘theory’ is solely the domain of expert educational researchers, we reassert our alignment with other researchers who recognize the critical significance of educational practitioners’ comprehension of theory in actualizing specific practices (Crossouard and Pryor, 2012). Our findings indicate that the participants in our study significantly enhanced their capacities in reflecting on their assessment competences.

Notably, the higher education students in this study understood the complexity of the assessment procedures (RQ #2) and were motivated to use multiple forms of assessment in their future work (RQ #4). In terms of learner-centred feedback on effectiveness (RQ #6), peer- and group-assessment strategies emerged as the most effective and productive methods from a formative point of view. As for RQ #5, we did not find specific differences in the use of formative assessment in the programs for pre-service teachers, social workers, and heads of social services. Furthermore, despite the different opinions expressed by the participants, the majority declared that it would be important to repeat the formative assessment activities with students in the educational profession programs the following academic year.

These conclusions and the findings have implications for future work in this area. The value of integrating formative assessment and learner-identified feedback into instructional practices is evident and will inform future studies and formative assessments, with some recommendations.

For future studies, our first recommendation is an emphasis on peer-assessment strategies. From a practical and organizational perspective, strengthening the peer-assessment components, for example through mandatory peer comments, is one way to avoid the reported lack of feedback. In the present study, qualitative comments were optional and some students did not write any comments to their peers.

Our second recommendation further derives from the students who did not receive peer comments and emphasized that the rubric scores and indicators were not enough to express meaningful feedback. Supporting students in their development of effective peer feedback practices would be an important next step in strengthening this component of instruction and developing student competencies in formative assessment for the education professions.

A third recommendation is related to group assessment. To avoid low levels of engagement and high levels of confusion during group-assessment, additional measures are suggested. One option includes arranging random groups of students with the presence of one instructor in each group to ensure sufficient guidance during the activity. Following the activity, the instructor should leave the group to promote open discussion and support students’ assessment of the peer presentation, so that students can more freely and informally assess the learning. Then, the instructor will come back into the group to give a formal grade. We anticipate that this response could resolve issues of lack of teacher assessment and the question of assigning a formal evaluative grade to this activity, thereby effectively balancing summative and formative assessment methods (Lui and Andrade, 2022).

Typically, formative assessment is aimed at fostering developmental functions mainly related to metacognitive aspects of learning, whereas summative assessment is commonly targeted toward evaluative and administrative decisions (grades, reports, etc.) about performance (Baker, 2007). As the findings of this study reveal, we emphasize the main original contribution of this study: formative assessment is an effective combination of developmental and evaluative purposes.

We further recommend that future studies should investigate both (a) metacognitive steps to underline the formative values of assessment, and (b) evaluative steps to underscore the summative standards to be reached by a professional in education. This design will both align with the recent European Commission report (European Commission, Directorate-General for Education, Youth, Sport and Culture, et al., 2023) and support the creation of a strong combination of formative and summative feedback to deeply assess learners’ competences.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

Ethical approval was not required for the studies involving humans because this study strictly followed the principles of ethics. The participants were informed about the aims, the activities and the instruments designed for this study. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

DP: Conceptualization, Formal analysis, Investigation, Methodology, Project administration, Writing – original draft. EN: Formal analysis, Methodology, Writing – original draft. EM: Formal analysis, Methodology, Writing – original draft. MI: Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Andrade, H. L. (2019). A critical review of research on student self-assessment. Front. Educ. 4:87. doi: 10.3389/feduc.2019.00087

Andrade, H. G., and Boulay, B. A. (2003). Role of rubric-referenced self-assessment in learning to write. J. Educ. Res. 97, 21–30. doi: 10.1080/00220670309596625

Asghar, A. (2010). Reciprocal peer coaching and its use as a formative assessment strategy for first-year students. Assess. Eval. High. Educ. 35, 403–417. doi: 10.1080/02602930902862834

Baker, D. F. (2007). Peer assessment in small groups: a comparison of methods. J. Manag. Educ. 32, 183–209. doi: 10.1177/1052562907310489

Bawane, J., and Sharma, R. (2020). Formative assessments and the continuity of learning during emergencies and crises. NEQMAP 2020 thematic review. UNESCO.

Biesma, R., Kennedy, M.-C., Pawlikowska, T., Brugha, R., Conroy, R., and Doyle, F. (2019). Peer assessment to improve medical student’s contributions to team-based projects: randomized controlled trial and qualitative follow-up. BMC Med. Educ. 19:371. doi: 10.1186/s12909-019-1783-8

Bond, E., Woolcott, G., and Markopoulos, C. (2020). Why aren’t teachers using formative assessment? What can be done about it? Assess. Matters 14, 112–136. doi: 10.18296/am.0046

Brown, S. (1999). “Institutional strategies for assessment” in Assessment matters in higher education: Choosing and using diverse approaches. eds. S. Brown and A. Glasner (Buckingham: SRHE and Open University Press), 3–13.

Brown, G. T. (2019). Is assessment for learning really assessment? Front. Educ. 4:64. doi: 10.3389/feduc.2019.00064

Bruner, J. S. (1970). “Some theories on instruction” in Readings in educational psychology. ed. E. Stones (Methuen), 112–124.

Cefai, C., Downes, P., and Cavioni, V. (2021). A formative, inclusive, whole school approach to the assessment of social and emotional education in the EU. NESET report. Publications Office of the European Union. doi: 10.2766/905981

Clark, I. (2012). Formative assessment: assessment is for self-regulated learning. Educ. Psychol. Rev. 24, 205–249. doi: 10.1007/s10648-011-9191-6

Corbin, J., and Strauss, A. (2015). Basics of qualitative research: Techniques and procedures for developing grounded theory. Los Angeles, CA: Sage.

Creswell, J. W., and Clark, V. P. (2011). Designing and conducting mixed methods research Thousand Oaks: Sage.

Creswell, J. W., Clark, V. P., Gutmann, M., and Hanson, W. (2003). “Advanced mixed methods research designs” in Handbook of mixed methods in social & behavioral research. eds. A. Tashakkori and C. Teddlie (Thousand Oaks, CA: Sage), 209–240.

Crossouard, B., and Pryor, J. (2012). How theory matters: formative assessment theory and practices and their different relations to education. Stud. Philos. Educ. 31, 251–263. doi: 10.1007/s11217-012-9296-5

Dann, R. (2014). Assessment as learning: blurring the boundaries of assessment and learning for theory, policy and practice. Assess. Educ. Princ. Policy Pract. 21, 149–166. doi: 10.1080/0969594x.2014.898128

De Smet, M. M., Meganck, R., Van Nieuwenhove, K., Truijens, F. L., and Desmet, M. (2019). No change? A grounded theory analysis of depressed patients' perspectives on non-improvement in psychotherapy. Front. Psychol. 10:588. doi: 10.3389/fpsyg.2019.00588

Dekker, H., Schönrock-Adema, J., Snoek, J. W., van der Molen, T., and Cohen-Schotanus, J. (2013). Which characteristics of written feedback are perceived as stimulating students’ reflective competence: an exploratory study. BMC Med. Educ. 13, 1–7. doi: 10.1186/1472-6920-13-94

DeVon, H. A., Block, M. E., Moyle-Wright, P., Ernst, D. M., Hayden, S. J., Lazzara, D. J., et al. (2007). A psychometric toolbox for testing validity and reliability. J. Nurs. Scholarsh. 39, 155–164. doi: 10.1111/j.1547-5069.2007.00161.x

Doria, B., Grion, V., and Paccagnella, O. (2023). Assessment approaches and practices of university lecturers: a nationwide empirical research. Ital. J. Educ. Res. 30, 129–143. doi: 10.7346/sird-012023-p129

European Commission, Directorate-General for Education, Youth, Sport and Culture, Looney, J., and Kelly, G. (2023). Assessing learners’ competences – Policies and practices to support successful and inclusive education – Thematic report. Publications Office of the European Union. Available at: https://data.europa.eu/doi/10.2766/221856

Evans, D. J., Zeun, P., and Stanier, R. A. (2013). Motivating student learning using a formative assessment journey. J. Anat. 224, 296–303. doi: 10.1111/joa.12117

Hadrill, R. (1995). “The NCVQ model of assessment at higher levels” in Assessment for learning in higher education. ed. P. Knight (London: Kogan Page), 167–179.

Hamodi, C., López-Pastor, V. M., and López-Pastor, A. T. (2017). If I experience formative assessment whilst studying at university, will I put it into practice later as a teacher? Formative and shared assessment in initial teacher education (ITE). Eur. J. Teach. Educ. 40, 171–190. doi: 10.1080/02619768.2017.1281909

Higgins, M., Grant, F., and Thompson, P. (2010). Formative assessment: balancing educational effectiveness and resource efficiency. J. Educ. Built Environ. 5, 4–24. doi: 10.11120/jebe.2010.05020004

Homayouni, M. (2022). Peer assessment in group-oriented classroom contexts: on the effectiveness of peer assessment coupled with scaffolding and group work on speaking skills and vocabulary learning. Lang. Test. Asia 12, 1–23. doi: 10.1186/s40468-022-00211-3

Ibarra-Sáiz, M. S., Rodríguez-Gómez, G., and Boud, D. (2020). The quality of assessment tasks as a determinant of learning. Assess. Eval. High. Educ. 46, 943–955. doi: 10.1080/02602938.2020.1828268

Ismail, S. M., Rahul, D. R., Patra, I., and Rezvani, E. (2022). Formative vs. summative assessment: impacts on academic motivation, attitude toward learning, test anxiety, and self-regulation skill. Lang. Test. Asia 12, 1–23. doi: 10.1186/s40468-022-00191-4

Jensen, L. X., Bearman, M., and Boud, D. (2023). Characteristics of productive feedback encounters in online learning. Teach. High. Educ. 1–15. doi: 10.1080/13562517.2023.2213168

Kealey, E. (2010). Assessment and evaluation in social work education: formative and summative approaches. J. Teach. Soc. Work. 30, 64–74. doi: 10.1080/08841230903479557

Koka, R., Jurāne-Brēmane, A., and Koķe, T. (2017). Formative assessment in higher education: from theory to practice. Eur. J. Soc. Sci. Educ. Res. 9:28. doi: 10.26417/ejser.v9i1.p28-34

Leach, L., Neutze, G., and Zepke, N. (1998). “Motivation in assessment” in Motivating students. eds. S. Brown, S. Armstrong, and G. Thompson (London: Kogan Page), 201–209.

Lui, A. M., and Andrade, H. L. (2022). The next black box of formative assessment: a model of the internal mechanisms of feedback processing. Front. Educ. 7:751801. doi: 10.3389/feduc.2022.751548

Montgomery, L., MacDonald, M., and Houston, E. S. (2023). Developing and evaluating a social work assessment model based on co-production methods. Br. J. Soc. Work 53, 3665–3684. doi: 10.1093/bjsw/bcad154

Morris, R., Perry, T., and Wardle, L. (2021). Formative assessment and feedback for learning in higher education: a systematic review. Review of Education 9, 1–26. doi: 10.1002/rev3.3292

Ng, E. M. W. (2016). Fostering pre-service teachers’ self-regulated learning through self- and peer assessment of wiki projects. Comput. Educ. 98, 180–191. doi: 10.1016/j.compedu.2016.03.015

Nicol, D. J., and Macfarlane-Dick, D. (2006). Formative assessment and self-regulated learning: a model and seven principles of good feedback practice. Stud. High. Educ. 31, 199–218. doi: 10.1080/03075070600572090

Ozan, C., and Kıncal, R. Y. (2018). The effects of formative assessment on academic achievement, attitudes toward the lesson, and self-regulation skills. Educ. Sci. Theory Pract. 18, 85–118. doi: 10.12738/estp.2018.1.0216

Panadero, E., Brown, G. T., and Strijbos, J.-W. (2015). The future of student self-assessment: a review of known unknowns and potential directions. Educ. Psychol. Rev. 28, 803–830. doi: 10.1007/s10648-015-9350-2

Pereira, D., Flores, M. A., and Niklasson, L. (2015). Assessment revisited: a review of research in assessment and evaluation in higher education. Assess. Eval. High. Educ. 41, 1008–1032. doi: 10.1080/02602938.2015.1055233

Pintrich, P. R., and Zusho, A. (2002). “The development of academic self-regulation: the role of cognitive and motivational factors” in Development of achievement motivation. eds. A. Wigfield and J. S. Eccles (San Diego: Academic Press), 249–284.

Spiliotopoulou, G. (2009). Reliability reconsidered: Cronbach’s alpha and paediatric assessment in occupational therapy. Aust. Occup. Ther. J. 56, 150–155. doi: 10.1111/j.1440-1630.2009.00785.x

Suhoyo, Y., Van Hell, E. A., Kerdijk, W., Emilia, O., Schönrock-Adema, J., Kuks, J. B., et al. (2017). Influence of feedback characteristics on perceived learning value of feedback in clerkships: does culture matter? BMC Med. Educ. 17, 1–7. doi: 10.1186/s12909-017-0904-5

Tashakkori, A., and Teddlie, C. (2009). Foundations of mixed methods research. London: SAGE Publications.

Tillema, H. (2010). “Formative Assessment in Teacher Education and Teacher Professional Development,” in International Encyclopedia of Education. eds. Peterson, P., Baker, E., and McGaw, B.. 3rd edn (Oxford: Elsevier), 563–571.

van Gennip, N. A. E., Segers, M. S. R., and Tillema, H. H. (2010). Peer assessment as a collaborative learning activity: the role of interpersonal variables and conceptions. Learn. Instr. 20, 280–290. doi: 10.1016/j.learninstruc.2009.08.010

Wanner, T., and Palmer, E. (2018). Formative self-and peer assessment for improved student learning: the crucial factors of design, teacher participation and feedback. Assess. Eval. High. Educ. 43, 1032–1047. doi: 10.1080/02602938.2018.1427698

Watkins, M. W. (2018). Exploratory factor analysis: a guide to best practice. J. Black Psychol. 44, 219–246. doi: 10.1177/0095798418771807

Watson, G. P. L., and Kenny, N. (2014). Teaching critical reflection to graduate students. Collected Essays Learn. Teach. 7:56. doi: 10.22329/celt.v7i1.3966

Xiao, Y., and Yang, M. (2019). Formative assessment and self-regulated learning: how formative assessment supports students’ self-regulation in English language learning. System 81, 39–49. doi: 10.1016/j.system.2019.01.004

Yin, S., Chen, F., and Chang, H. (2022). Assessment as learning: how does peer assessment function in students’ learning? Front. Psychol. 13:912568. doi: 10.3389/fpsyg.2022.912568

Keywords: formative assessment, professionals in education, higher education, teacher education, social workers

Citation: Parmigiani D, Nicchia E, Murgia E and Ingersoll M (2024) Formative assessment in higher education: an exploratory study within programs for professionals in education. Front. Educ. 9:1366215. doi: 10.3389/feduc.2024.1366215

Edited by:

Luisa Losada-Puente, University of A Coruña, Spain

Reviewed by:

Brett Bligh, Lancaster University, United Kingdom

Agnieszka Szplit, Jan Kochanowski University, Poland

Copyright © 2024 Parmigiani, Nicchia, Murgia and Ingersoll. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Davide Parmigiani, davide.parmigiani@unige.it
